AWS – API Gateway

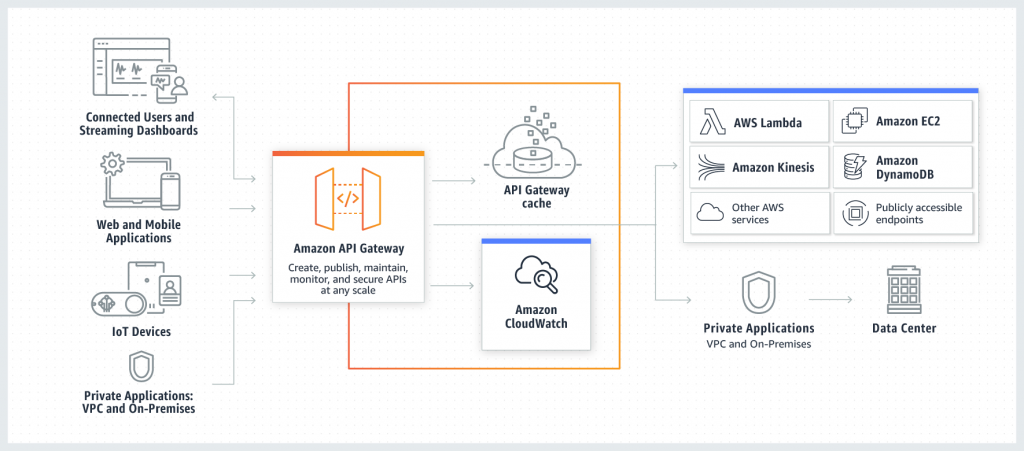

Amazon API Gateway is a fully managed service that makes it easy for developers to create, publish, maintain, monitor, and secure APIs at any scale. API Gateway handles all the tasks involved in accepting and processing up to hundreds of thousands of concurrent API calls, including traffic management, authorization and access control, monitoring, and API version management. API Gateway has no minimum fees or startup costs. You pay only for the API calls you receive and the amount of data transferred out and, with the API Gateway tiered pricing model, you can reduce your cost as your API usage scales.

AWS API Gateway Developer Guide

August 5, 2019

AWS – Lambda

AWS Lambda lets you run code without provisioning or managing servers. You pay only for the compute time you consume – there is no charge when your code is not running. With Lambda, you can run code for virtually any type of application or backend service – all with zero administration. Just upload your code and Lambda takes care of everything required to run and scale your code with high availability. You can set up your code to automatically trigger from other AWS services or call it directly from any web or mobile app.

You can use AWS Lambda to run your code in response to events, such as changes to data in an Amazon S3 bucket or an Amazon DynamoDB table; to run your code in response to HTTP requests using Amazon API Gateway; or invoke your code using API calls made using AWS SDKs. With these capabilities, you can use Lambda to easily build data processing triggers for AWS services like Amazon S3 and Amazon DynamoDB, process streaming data stored in Kinesis, or create your own back end that operates at AWS scale, performance, and security.

When to use Lambda?

- When you want to focus on your application code rather than on maintaining servers, which is what most companies would like to do.

- When using AWS Lambda, you are responsible only for your code. AWS Lambda manages the compute fleet that offers a balance of memory, CPU, network, and other resources. This is in exchange for flexibility, which means you cannot log in to compute instances, or customize the operating system or language runtime.

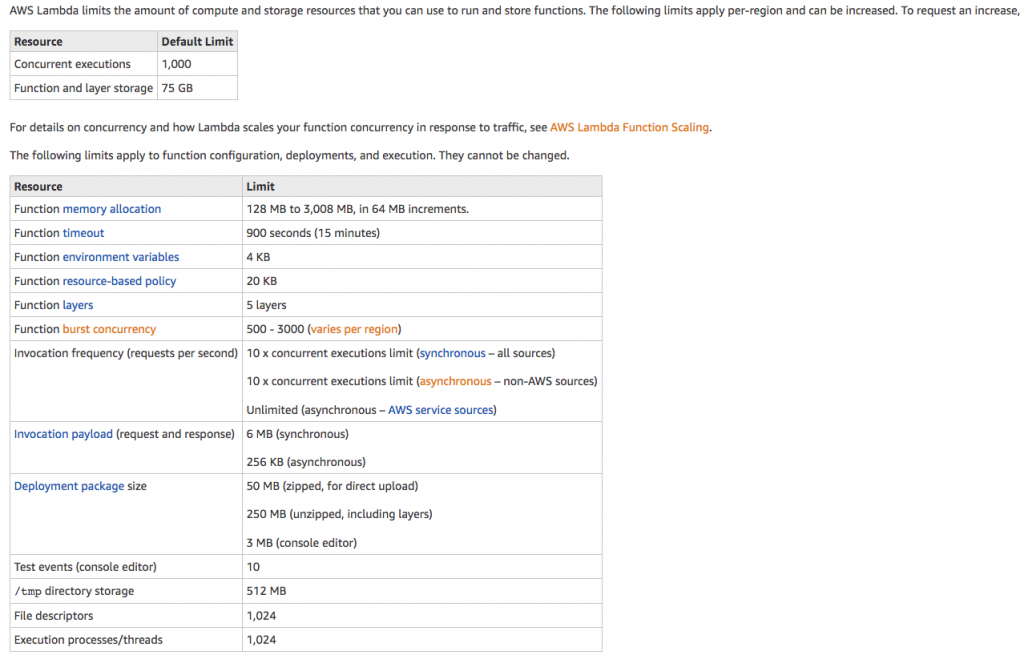

- Maximum execution duration per request is 900 seconds (15 minutes).

- The Lambda function deployment package size is limited to 50 MB (compressed), and the non-persistent scratch area (/tmp) available to the function is 512 MB by default.

- Cold starts: the first request takes longer to handle because Lambda has to start a new instance of the function. The cold start can be a real problem if you expect fast responses at all times and there are no active instances of your function. This can happen in low-traffic scenarios, because AWS terminates instances of your function when there have been no requests for a long time. One workaround is to send a request periodically so that there is always an active instance ready to serve requests.

- Lambda functions write their logs to CloudWatch, which is the primary tool for troubleshooting and monitoring your functions.

- Lambda functions are short-lived, therefore they need to persist their state somewhere. Available options include using DynamoDB or RDS tables, which require fixed payments per month.

- You can invoke AWS Lambda functions over HTTPS. You can do this by defining a custom REST API and endpoint using Amazon API Gateway, and then mapping individual methods, such as GET and PUT, to specific Lambda functions. Alternatively, you could add a special method named ANY to map all supported methods (GET, POST, PATCH, DELETE) to your Lambda function. When you send an HTTPS request to the API endpoint, the Amazon API Gateway service invokes the corresponding Lambda function.

- Amazon API Gateway invokes your function synchronously with an event that contains details about the HTTP request that it received.

- Amazon API Gateway also adds a layer between your application users and your app logic that can throttle individual users or requests, protect against Distributed Denial of Service attacks, and provide a caching layer to cache responses from your Lambda function.

- Amazon API Gateway cannot invoke your Lambda function without your permission. You grant this permission via the permission policy associated with the Lambda function. Lambda also needs permission to call other AWS services like S3 or DynamoDB.

- An Amazon API Gateway is a collection of resources and methods. For this tutorial, you create one resource (DynamoDBManager) and define one method (POST) on it. The method is backed by a Lambda function (LambdaFunctionOverHttps). That is, when you call the API through an HTTPS endpoint, Amazon API Gateway invokes the Lambda function.

- Pass through the entire request – A Lambda function can receive the entire HTTP request (instead of just the request body) and set the HTTP response (instead of just the response body) using the AWS_PROXY integration type.

- Catch-all methods – Map all methods of an API resource to a single Lambda function with a single mapping, using the ANY catch-all method.

- Catch-all resources – Map all sub-paths of a resource to a Lambda function without any additional configuration using the new path parameter ({proxy+}).

outputType handler-name(inputType input, Context context) {
    …
}

import com.amazonaws.services.lambda.runtime.Context;
import com.amazonaws.services.lambda.runtime.RequestHandler;

public class HelloPojo implements RequestHandler<RequestClass, ResponseClass> {

    public ResponseClass handleRequest(RequestClass request, Context context) {
        String greetingString = String.format("Hello %s, %s.", request.firstName, request.lastName);
        return new ResponseClass(greetingString);
    }
}
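The AWS_PROXY pass-through with a catch-all {proxy+} resource, described in the list above, can be sketched in plain Java. This is only an illustration under some assumptions: the class name ProxyHandlerSketch is made up, the event is modeled as a plain Map, and in a deployed function the same logic would sit behind RequestHandler<Map<String, Object>, Map<String, Object>>. The field names (httpMethod, pathParameters, statusCode, headers, body, isBase64Encoded) follow the REST API proxy event and response shape.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical handler logic for an ANY /{proxy+} route with AWS_PROXY integration.
// Kept free of SDK types so it can run on its own.
final class ProxyHandlerSketch {

    static Map<String, Object> handle(Map<String, Object> event) {
        // The proxy event carries the HTTP method and the matched {proxy+} sub-path.
        String method = String.valueOf(event.get("httpMethod"));
        String subPath = "";
        Object params = event.get("pathParameters");
        if (params instanceof Map) {
            Object proxy = ((Map<?, ?>) params).get("proxy");
            if (proxy != null) {
                subPath = proxy.toString();
            }
        }

        Map<String, String> headers = new HashMap<>();
        headers.put("Content-Type", "text/plain");

        // The function sets the whole HTTP response, not just the body.
        Map<String, Object> response = new HashMap<>();
        response.put("statusCode", 200);
        response.put("headers", headers);
        response.put("body", method + " /" + subPath);
        response.put("isBase64Encoded", false);
        return response;
    }

    public static void main(String[] args) {
        Map<String, Object> pathParameters = new HashMap<>();
        pathParameters.put("proxy", "orders/42");
        Map<String, Object> event = new HashMap<>();
        event.put("httpMethod", "GET");
        event.put("pathParameters", pathParameters);
        System.out.println(handle(event).get("body")); // GET /orders/42
    }
}
```

Because one function sees every method and sub-path, the routing decision moves from API Gateway configuration into your own code.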

AWS – CloudFront

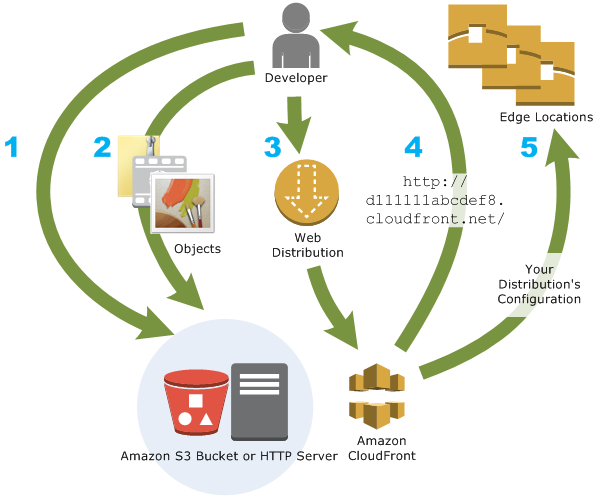

Amazon CloudFront is a fast content delivery network (CDN) service that securely delivers data, videos, applications, and APIs to customers globally with low latency, high transfer speeds, all within a developer-friendly environment. CloudFront is integrated with AWS – both physical locations that are directly connected to the AWS global infrastructure, as well as other AWS services. CloudFront works seamlessly with services including AWS Shield for DDoS mitigation, Amazon S3, Elastic Load Balancing or Amazon EC2 as origins for your applications, and Lambda@Edge to run custom code closer to customers’ users and to customize the user experience. Lastly, if you use AWS origins such as Amazon S3, Amazon EC2 or Elastic Load Balancing, you don’t pay for any data transferred between these services and CloudFront.

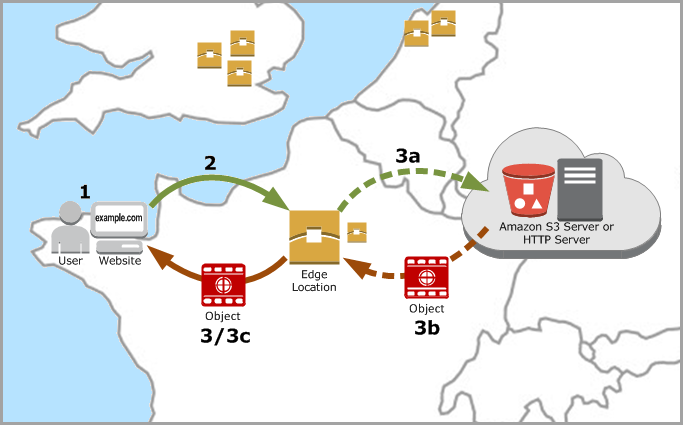

Amazon CloudFront is a web service that speeds up distribution of your static and dynamic web content, such as .html, .css, .js, and image files, to your users. CloudFront delivers your content through a worldwide network of data centers called edge locations. When a user requests content that you’re serving with CloudFront, the user is routed to the edge location that provides the lowest latency (time delay), so that content is delivered with the best possible performance.

- If the content is already in the edge location with the lowest latency, CloudFront delivers it immediately.

- If the content is not in that edge location, CloudFront retrieves it from an origin that you’ve defined—such as an Amazon S3 bucket, a MediaPackage channel, or an HTTP server (for example, a web server) that you have identified as the source for the definitive version of your content.

Use cases:

- Accelerate static website content delivery – CloudFront can speed up the delivery of your static content (for example, images, style sheets, JavaScript, and so on) to viewers across the globe.

- Serve on-demand or live streaming video – you can cache media fragments at the edge, so that multiple requests for the manifest file that delivers the fragments in the right order can be combined, to reduce the load on your origin server.

- Encrypt Specific Fields Throughout System Processing – When you configure HTTPS with CloudFront, you already have secure end-to-end connections to origin servers. When you add field-level encryption, you can protect specific data throughout system processing in addition to HTTPS security, so that only certain applications at your origin can see the data.

- Customize at the Edge – Running serverless code at the edge opens up a number of possibilities for customizing the content and experience for viewers, at reduced latency. For example, you can return a custom error message when your origin server is down for maintenance, so viewers don’t get a generic HTTP error message. Or you can use a function to help authorize users and control access to your content, before CloudFront forwards a request to your origin.

- Serve Private Content by using Lambda@Edge Customizations – Using Lambda@Edge can help you configure your CloudFront distribution to serve private content from your own custom origin, as an option to using signed URLs or signed cookies.

How CloudFront Delivers Content to Your Users

- A user accesses your website or application

- DNS routes the request to the CloudFront POP (edge location) that can best serve it, typically the nearest CloudFront POP in terms of latency.

- CloudFront checks its cache for the requested files. If the files are in the cache, CloudFront returns them to the user. If the files are not in the cache, it calls the origin server.
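The cache check in the last step can be pictured with a toy model. This is a sketch only, not how CloudFront is implemented: EdgeLocationSketch, its origin function, and invalidateAll are hypothetical names, and the origin is simulated with a lambda instead of an S3 bucket or HTTP server.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Toy model of a single edge location in front of an origin.
final class EdgeLocationSketch {
    private final Map<String, String> cache = new HashMap<>();
    private final Function<String, String> origin; // stands in for S3 or an HTTP server
    private int originFetches = 0;

    EdgeLocationSketch(Function<String, String> origin) {
        this.origin = origin;
    }

    String get(String path) {
        String cached = cache.get(path);
        if (cached != null) {
            return cached; // cache hit: served straight from the edge
        }
        originFetches++; // cache miss: call the origin server
        String fresh = origin.apply(path);
        cache.put(path, fresh);
        return fresh;
    }

    // Rough analogue of invalidating every path: drop all cached files.
    void invalidateAll() {
        cache.clear();
    }

    int originFetches() {
        return originFetches;
    }

    public static void main(String[] args) {
        EdgeLocationSketch edge = new EdgeLocationSketch(path -> "content of " + path);
        edge.get("/index.html");
        edge.get("/index.html");
        System.out.println(edge.originFetches()); // 1
        edge.invalidateAll();
        edge.get("/index.html");
        System.out.println(edge.originFetches()); // 2
    }
}
```

The second request never reaches the origin, which is where the latency and origin-load savings come from. It also shows why stale files stay visible until they are removed from the cache.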

When you deploy updated resources to an S3 bucket that uses CloudFront for distribution, you need to invalidate those resources so that the edge locations serve the new versions.

Use the CLI:

aws cloudfront --profile awsProfile create-invalidation --distribution-id distribution_id --paths "/*"

Example: aws cloudfront --profile folauk110 create-invalidation --distribution-id 123321test --paths "/*"

AWS Cloudfront Developer Guide

AWS – S3

Amazon Simple Storage Service (Amazon S3) is an object storage service that offers industry-leading scalability, data availability, security, and performance. This means customers of all sizes and industries can use it to store and protect any amount of data for a range of use cases, such as websites, mobile applications, backup and restore, archive, enterprise applications, IoT devices, and big data analytics. Amazon S3 provides easy-to-use management features so you can organize your data and configure finely-tuned access controls to meet your specific business, organizational, and compliance requirements. Amazon S3 is designed for 99.999999999% (11 9’s) of durability, and stores data for millions of applications for companies all around the world.

Use cases:

- Backup and restore

- Disaster recovery

- Archive

- Data lakes and big data analytics

- Hybrid cloud storage

- Cloud-native application data

Overview of S3

Amazon S3 has a simple web services interface that you can use to store and retrieve any amount of data, at any time, from anywhere on the web.

S3 Bucket

S3 Bucket Restrictions and Limitations

- By default, you can create up to 100 buckets per account. If you need more, you can submit a support ticket to increase your account bucket limit to a maximum of 1,000 buckets.

- Bucket ownership is not transferable; however, if a bucket is empty, you can delete it. After a bucket is deleted, the name becomes available to reuse, but the name might not be available for you to reuse for various reasons. For example, some other account could create a bucket with that name. Note, too, that it might take some time before the name can be reused. So if you want to use the same bucket name, don’t delete the bucket.

- There is no limit to the number of objects that can be stored in a bucket and no difference in performance whether you use many buckets or just a few. You can store all of your objects in a single bucket, or you can organize them across several buckets.

- After you have created a bucket, you can’t change its Region.

- You cannot create a bucket within another bucket.

- If your application automatically creates buckets, choose a bucket naming scheme that is unlikely to cause naming conflicts. Ensure that your application logic will choose a different bucket name if a bucket name is already taken.

- After you create an S3 bucket, you can’t change the bucket name, so choose the name wisely.

Rules for bucket naming

- Bucket names must be unique across all existing bucket names in Amazon S3.

- Bucket names must comply with DNS naming conventions.

- Bucket names must be at least 3 and no more than 63 characters long.

- Bucket names must not contain uppercase characters or underscores.

- Bucket names must start with a lowercase letter or number.

- Bucket names must not be formatted as an IP address (for example, 192.168.5.4).
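The naming rules above are easy to check before calling S3. The following is a minimal sketch; BucketNameRules is a made-up helper, and two of its checks (the name must also end with a letter or number, and must not contain two adjacent periods) are assumptions drawn from DNS naming conventions rather than from the list above. Global uniqueness cannot be checked locally.

```java
import java.util.regex.Pattern;

// Hypothetical local validator for the bucket naming rules listed above.
final class BucketNameRules {
    // 3 to 63 characters: lowercase letters, digits, hyphens, and periods,
    // starting with a letter or digit. Ending with a letter or digit is an
    // assumption based on DNS naming conventions.
    private static final Pattern SHAPE =
            Pattern.compile("^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$");

    // Four dot-separated groups of digits, such as 192.168.5.4.
    private static final Pattern IP_LIKE =
            Pattern.compile("^\\d{1,3}(\\.\\d{1,3}){3}$");

    static boolean isValid(String name) {
        if (name == null || !SHAPE.matcher(name).matches()) {
            return false;
        }
        if (name.contains("..")) {
            return false; // assumption: empty DNS labels are not allowed
        }
        return !IP_LIKE.matcher(name).matches();
    }

    public static void main(String[] args) {
        System.out.println(isValid("my-bucket-01")); // true
        System.out.println(isValid("My_Bucket"));    // false
        System.out.println(isValid("192.168.5.4"));  // false
        System.out.println(isValid("ab"));           // false
    }
}
```

A check like this only catches malformed names early. The create call can still fail if another account already owns the name.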

When you use server-side encryption, Amazon S3 encrypts an object before saving it to disk in its data centers and decrypts it when you download the objects.

An Amazon S3 object consists of the following:

- Key – The name that you assign to an object. You use the object key to retrieve the object.

- Version Id – Within a bucket, a key and version ID uniquely identify an object. The version ID is a string that Amazon S3 generates when you add an object to a bucket.

- Value – The content that you are storing. An object value can be any sequence of bytes. Objects can range in size from zero to 5 TB.

- Metadata – A set of name-value pairs with which you can store information regarding the object. You can assign metadata, referred to as user-defined metadata, to your objects in Amazon S3. Amazon S3 also assigns system-metadata to these objects, which it uses for managing objects.

- Subresources – Amazon S3 uses the subresource mechanism to store object-specific additional information. Because subresources are subordinates to objects, they are always associated with some other entity such as an object or a bucket.

- Access Control Information – You can control access to the objects you store in Amazon S3. Amazon S3 supports both resource-based access control, such as access control lists (ACLs) and bucket policies, and user-based access control.

Object key (or key name) uniquely identifies the object in a bucket. Object metadata is a set of name-value pairs. You can set object metadata at the time you upload it. After you upload the object, you cannot modify object metadata. The only way to modify object metadata is to make a copy of the object and set the metadata.

The Amazon S3 data model is a flat structure: you create a bucket, and the bucket stores objects. There is no hierarchy of sub buckets or subfolders. However, you can infer logical hierarchy using key name prefixes and delimiters as the Amazon S3 console does. The Amazon S3 console supports a concept of folders.

Amazon S3 supports buckets and objects, and there is no hierarchy in Amazon S3. However, the prefixes and delimiters in an object key name enable the Amazon S3 console and the AWS SDKs to infer hierarchy and introduce the concept of folders.
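To see how folders can be inferred from a flat list of keys, here is a small sketch. It is not the S3 API: FolderViewSketch and commonPrefixes are hypothetical names, and the keys are sample data. The idea is that every key starting with the prefix is cut off at the next delimiter, and the distinct results are the "folders".

```java
import java.util.Arrays;
import java.util.List;
import java.util.SortedSet;
import java.util.TreeSet;

// Hypothetical illustration of inferring folders from key name prefixes and delimiters.
final class FolderViewSketch {

    // For each key that starts with prefix, keep everything up to and
    // including the next delimiter. Keys with no further delimiter are
    // plain objects at this level, not folders, so they are skipped.
    static SortedSet<String> commonPrefixes(List<String> keys, String prefix, String delimiter) {
        SortedSet<String> folders = new TreeSet<>();
        for (String key : keys) {
            if (!key.startsWith(prefix)) {
                continue;
            }
            int next = key.indexOf(delimiter, prefix.length());
            if (next >= 0) {
                folders.add(key.substring(0, next + delimiter.length()));
            }
        }
        return folders;
    }

    public static void main(String[] args) {
        List<String> keys = Arrays.asList(
                "photos/2018/c.jpg", "photos/2019/a.jpg", "photos/2019/b.jpg", "readme.txt");
        System.out.println(commonPrefixes(keys, "photos/", "/")); // [photos/2018/, photos/2019/]
        System.out.println(commonPrefixes(keys, "", "/"));        // [photos/]
    }
}
```

Nothing named photos/ is stored anywhere. The folder exists only because some keys happen to share that prefix.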

System Metadata – For each object stored in a bucket, Amazon S3 maintains a set of system metadata. Amazon S3 processes this system metadata as needed. For example, Amazon S3 maintains object creation date and size metadata and uses this information as part of object management.

User-defined Metadata – When uploading an object, you can also assign metadata to the object.

AmazonS3 s3Client = AmazonS3ClientBuilder.standard()
        .withCredentials(new ProfileCredentialsProvider())
        .withRegion(clientRegion)
        .build();

if (!s3Client.doesBucketExistV2(bucketName)) {
    // Because the CreateBucketRequest object doesn't specify a region, the
    // bucket is created in the region specified in the client.
    s3Client.createBucket(new CreateBucketRequest(bucketName));

    // Verify that the bucket was created by retrieving it and checking its location.
    String bucketLocation = s3Client.getBucketLocation(new GetBucketLocationRequest(bucketName));
    System.out.println("Bucket location: " + bucketLocation);
}

AmazonS3 s3Client = AmazonS3ClientBuilder.standard()
        .withRegion(clientRegion)
        .build();

// Upload a text string as a new object.
s3Client.putObject(bucketName, stringObjKeyName, "Uploaded String Object");

// Upload a file as a new object with ContentType and title specified.
PutObjectRequest request = new PutObjectRequest(bucketName, fileObjKeyName, new File(fileName));
ObjectMetadata metadata = new ObjectMetadata();
// The MIME type for plain text is "text/plain".
metadata.setContentType("text/plain");
metadata.addUserMetadata("x-amz-meta-title", "someTitle");
request.setMetadata(metadata);
s3Client.putObject(request);

You need to sanitize your key names because they must be representable in URLs.

So I have a method that strips invalid characters out of a file name before creating a key from it.

/**
 * Removes every character that is not allowed in a key name.
 * Valid characters: letters a-z and A-Z, digits 0-9, period, underscore, and dash.
 */
public static String replaceInvalidCharacters(String fileName) {
    // Matches any run of characters outside the valid set.
    String invalidCharacters = "[^a-zA-Z0-9._-]+";

    // Remove invalid characters.
    return fileName.replaceAll(invalidCharacters, "");
}

AmazonS3 s3Client = AmazonS3ClientBuilder.standard()
        .withRegion(clientRegion)
        .withCredentials(new ProfileCredentialsProvider())
        .build();

// displayTextInputStream is a helper (not shown here) that prints the contents of a stream.

// Get an object and print its contents.
System.out.println("Downloading an object");
S3Object fullObject = s3Client.getObject(new GetObjectRequest(bucketName, key));
System.out.println("Content-Type: " + fullObject.getObjectMetadata().getContentType());
System.out.println("Content: ");
displayTextInputStream(fullObject.getObjectContent());

// Get a range of bytes from an object and print the bytes.
GetObjectRequest rangeObjectRequest = new GetObjectRequest(bucketName, key)
        .withRange(0, 9);
S3Object objectPortion = s3Client.getObject(rangeObjectRequest);
System.out.println("Printing bytes retrieved.");
displayTextInputStream(objectPortion.getObjectContent());

// Get an entire object, overriding the specified response headers, and print the object's content.
ResponseHeaderOverrides headerOverrides = new ResponseHeaderOverrides()
        .withCacheControl("No-cache")
        .withContentDisposition("attachment; filename=example.txt");
GetObjectRequest getObjectRequestHeaderOverride = new GetObjectRequest(bucketName, key)
        .withResponseHeaders(headerOverrides);
S3Object headerOverrideObject = s3Client.getObject(getObjectRequestHeaderOverride);
displayTextInputStream(headerOverrideObject.getObjectContent());

AmazonS3 s3Client = AmazonS3ClientBuilder.standard()
        .withRegion(clientRegion)
        .withCredentials(new ProfileCredentialsProvider())
        .build();

// Set the presigned URL to expire after one hour.
java.util.Date expiration = new java.util.Date();
long expTimeMillis = expiration.getTime();
expTimeMillis += 1000 * 60 * 60;
expiration.setTime(expTimeMillis);

// Generate the presigned URL.
System.out.println("Generating pre-signed URL.");
GeneratePresignedUrlRequest generatePresignedUrlRequest =
        new GeneratePresignedUrlRequest(bucketName, objectKey)
                .withMethod(HttpMethod.GET)
                .withExpiration(expiration);
URL url = s3Client.generatePresignedUrl(generatePresignedUrlRequest);
System.out.println("Pre-Signed URL: " + url.toString());

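How to create an S3 bucket from the AWS CLI

aws s3 mb s3://mybucket

Upload files to an S3 bucket

# copy a local file to mybucket and set the date after which the object is no longer cacheable
aws s3 cp test.txt s3://mybucket/test2.txt --expires 2014-10-01T20:30:00Z

# move a local file to mybucket (the local copy is deleted)
aws s3 mv test.txt s3://mybucket/test2.txt

# move all contents of the current directory to mybucket
aws s3 mv . s3://mybucket --recursive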

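Download files from an S3 bucket

# move a file from mybucket to the local machine (the object is removed from the bucket; use cp instead of mv to keep it)
aws s3 mv s3://mybucket/test.txt test2.txt

# move all files in mybucket to a local directory (local_mybucket)
aws s3 mv s3://mybucket local_mybucket --recursive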

Sync files

# sync (upload) all files in the current directory to mybucket
aws s3 sync . s3://mybucket

# sync mybucket to mybucket2
aws s3 sync s3://mybucket s3://mybucket2

# download all content of mybucket to the current directory (sync is recursive by default)
aws s3 sync s3://mybucket .

# files that exist in the bucket but not in the local directory are deleted from the bucket
aws s3 sync . s3://mybucket --delete

# files matching the pattern are excluded from the sync
aws s3 sync . s3://mybucket --exclude "*.jpg"

List S3 buckets within your account

aws s3api list-buckets

# the query option filters the output of list-buckets down to only the bucket names
aws s3api list-buckets --query "Buckets[].Name"

# list the objects in a bucket
aws s3api list-objects --bucket bucketName

# get objects whose keys start with a given prefix (--prefix)
aws s3api list-objects --bucket sidecarhealth-dev-file-form --prefix prefixValue

AWS – ElastiCache

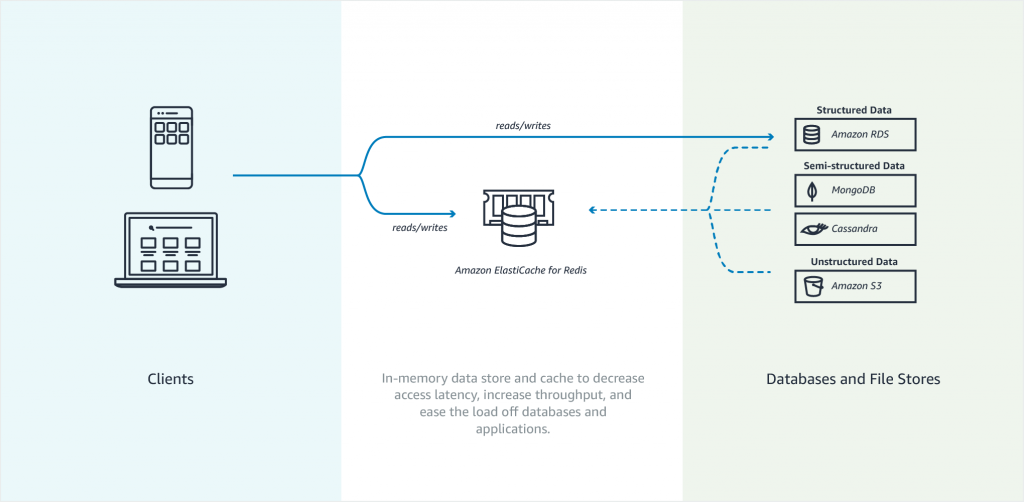

Amazon ElastiCache is an in-memory data store. It also works as a cache data store to support the most demanding applications requiring sub-millisecond response times. You no longer need to perform management tasks such as hardware provisioning, software patching, setup, configuration, monitoring, failure recovery, and backups. ElastiCache continuously monitors your clusters to keep your workloads up and running so that you can focus on higher-value application development.

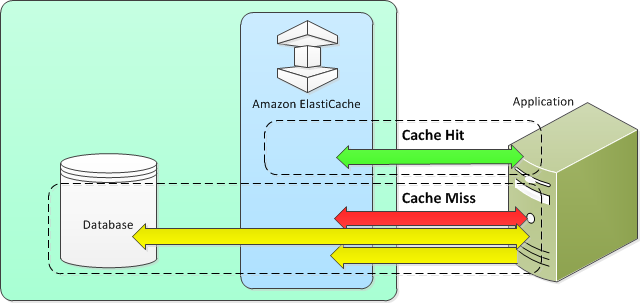

ElastiCache sits between your application and your database. When your application needs data, it first checks ElastiCache. If the data is there, ElastiCache returns it. If the data is not in ElastiCache, the application queries the database. Serving repeated reads from memory can increase the performance of your application significantly.

Amazon ElastiCache for Redis

Features to enhance reliability

- Automatic detection and recovery from cache node failures.

- Multi-AZ with automatic failover of a failed primary cluster to a read replica in Redis clusters that support replication.

- Redis (cluster mode enabled) supports partitioning your data across up to 90 shards.

- Starting with Redis version 3.2.6, all subsequent supported versions support in-transit and at-rest encryption with authentication so you can build HIPAA-compliant applications.

- Flexible Availability Zone placement of nodes and clusters for increased fault tolerance.

- Integration with other AWS services such as Amazon EC2, Amazon CloudWatch, AWS CloudTrail, and Amazon SNS to provide a secure, high-performance, managed in-memory caching solution.

Use cases for ElastiCache

- Selling tickets to an event

- Selling products that are hot on the market

- Generating Ads

- Loading web pages that need to be loaded faster. Users tend to opt out of the slower site in favor of the faster site. A study cited in Webpage Load Time Is Related to Visitor Loss revealed that for every 100-ms (1/10 second) increase in load time, sales decrease 1 percent. If someone wants data, whether for a webpage or a report that drives business decisions, you can deliver that data much faster if it’s cached.

What should I cache?

- Speed and Expense – It’s always slower and more expensive to acquire data from a database than from a cache. Some database queries are inherently slower and more expensive than others. For example, queries that perform joins on multiple tables are significantly slower and more expensive than simple, single table queries. If the interesting data requires a slow and expensive query to acquire, it’s a candidate for caching. If acquiring the data requires a relatively quick and simple query, it might still be a candidate for caching, depending on other factors.

- Data and Access Pattern – Determining what to cache also involves understanding the data itself and its access patterns. For example, it doesn’t make sense to cache data that is rapidly changing or is seldom accessed. For caching to provide a meaningful benefit, the data should be relatively static and frequently accessed, such as a personal profile on a social media site. Conversely, you don’t want to cache data if caching it provides no speed or cost advantage. For example, it doesn’t make sense to cache webpages that return the results of a search because such queries and results are almost always unique.

- Staleness – By definition, cached data is stale data—even if in certain circumstances it isn’t stale, it should always be considered and treated as stale. In determining whether your data is a candidate for caching, you need to determine your application’s tolerance for stale data. Your application might be able to tolerate stale data in one context, but not another. For example, when serving a publicly-traded stock price on a website, staleness might be acceptable, with a disclaimer that prices might be up to n minutes delayed. But when serving up the price for the same stock to a broker making a sale or purchase, you want real-time data.

Consider caching your data if the following is true:

- It is slow or expensive to acquire when compared to cache retrieval.

- It is accessed with sufficient frequency.

- It is relatively static, or if rapidly changing, staleness is not a significant issue.

- Nodes – A node is a fixed-size chunk of secure, network-attached RAM. Each node runs an instance of the engine and version that was chosen when you created your cluster. If necessary, you can scale the nodes in a cluster up or down to a different instance type. You can purchase nodes on a pay-as-you-go basis, where you only pay for your use of a node, or you can purchase reserved nodes at a significantly reduced hourly rate. If your usage rate is high, purchasing reserved nodes can save you money.

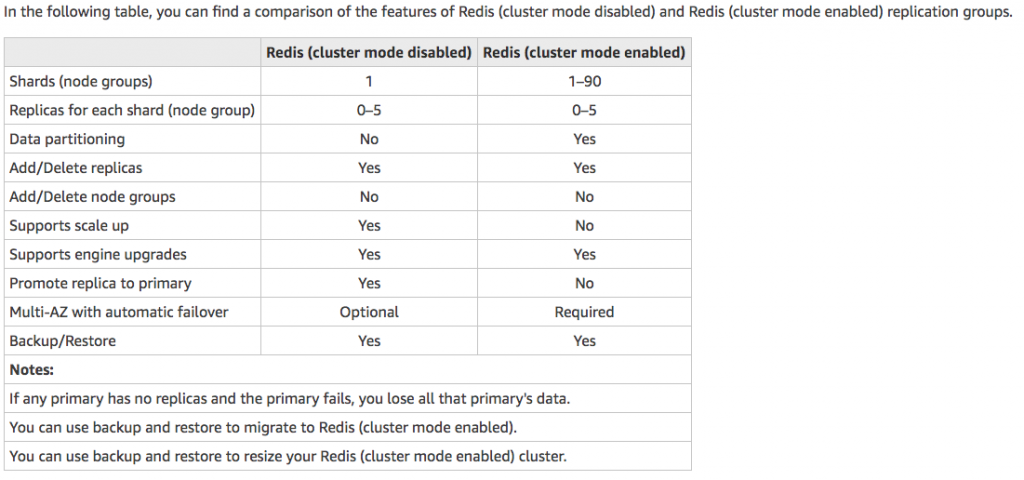

- Shards – A shard is a group of one to six related nodes. A Redis (cluster mode disabled) cluster always has one shard, while a Redis (cluster mode enabled) cluster can have 1-90 shards. One of the nodes in a shard is the read/write primary node. The others are replica nodes.

- Clusters – A Redis cluster is a logical group of one or more shards. For improved fault tolerance, we recommend having at least two nodes in a Redis cluster and enabling Multi-AZ with automatic failover. If your application is read-intensive, we recommend adding read-only replicas to a Redis (cluster mode disabled) cluster so you can spread the reads across a more appropriate number of nodes. ElastiCache supports changing a Redis (cluster mode disabled) cluster’s node type to a larger node type dynamically.

- Replica – Replication is implemented by grouping from two to six nodes in a shard. One of these nodes is the read/write primary node. All the other nodes are read-only replica nodes. Each replica node maintains a copy of the data from the primary node. Replica nodes use asynchronous replication mechanisms to keep synchronized with the primary node. Applications can read from any node in the cluster but can write only to primary nodes. Read replicas enhance scalability by spreading reads across multiple endpoints. Read replicas also improve fault tolerance by maintaining multiple copies of the data. Locating read replicas in multiple Availability Zones further improves fault tolerance.

- Endpoints – An endpoint is a unique address your application uses to connect to an ElastiCache node or cluster. Single-node Redis has a single endpoint for both reads and writes. Multi-node Redis has two separate endpoints: one for reads and one (the primary) for writes. If cluster mode is enabled, you have a single endpoint through which both writes and reads are done.

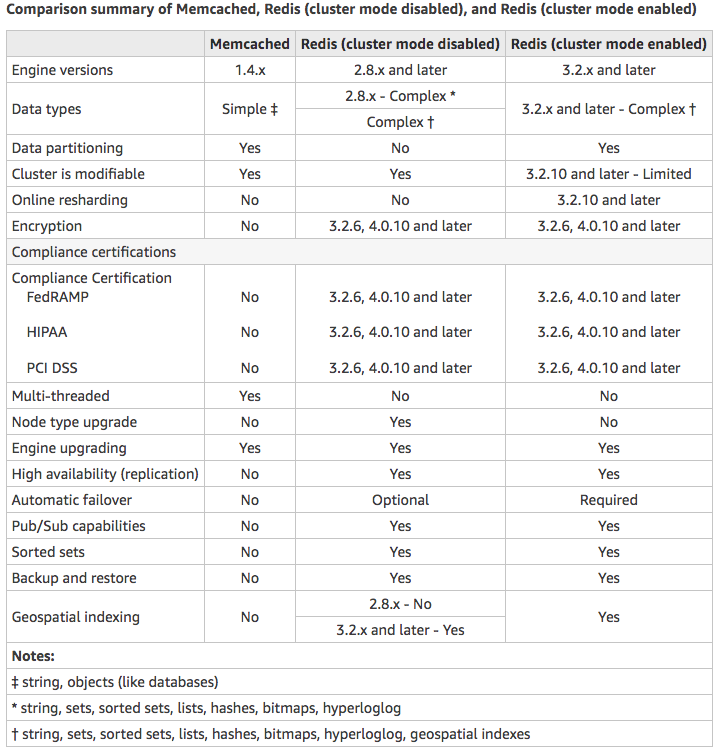

Choose Memcached if:

- You need the simplest model possible.

- You need to run large nodes with multiple cores or threads.

- You need the ability to scale out and in, adding and removing nodes as demand on your system increases and decreases.

- You need to cache objects, such as a database.

Choose Redis if:

- You need In-transit encryption.

- You need At-rest encryption.

- You need HIPAA compliance.

- You need to partition your data across two to 90 node groups

- You don’t need to scale-up to larger node types.

- You don’t need to change the number of replicas in a node group (partition).

- You need complex data types, such as strings, hashes, lists, sets, sorted sets, and bitmaps.

- You need to sort or rank in-memory datasets.

- You need persistence of your key store.

- You need to replicate your data from the primary to one or more read replicas for read-intensive applications.

- You need automatic failover if your primary node fails.

- You need publish and subscribe (pub/sub) capabilities—to inform clients about events on the server.

- You need backup and restore capabilities.

- You need to support multiple databases.

Caching Strategies

Lazy Loading – Lazy loading is a caching strategy that loads data into the cache only when necessary. ElastiCache is an in-memory key/value store that sits between your application and the data store (database) that it accesses. Whenever your application requests data, it first makes the request to the ElastiCache cache. If the data exists in the cache and is current, ElastiCache returns the data to your application. If the data does not exist in the cache, or the data in the cache has expired, your application requests the data from your data store, which returns the data to your application. Your application then writes the data received from the store to the cache so it can be more quickly retrieved the next time it is requested.
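The read path described above can be sketched in a few lines of Java. This is a minimal illustration under stated assumptions: LazyLoadingSketch is a hypothetical class, a HashMap stands in for the ElastiCache cluster, and a function stands in for the database query.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical lazy loading (cache-aside) read path with in-memory stand-ins.
final class LazyLoadingSketch {
    private final Map<String, String> cache = new HashMap<>(); // stands in for ElastiCache
    private final Function<String, String> database;           // stands in for the data store
    private int databaseReads = 0;

    LazyLoadingSketch(Function<String, String> database) {
        this.database = database;
    }

    String get(String key) {
        String value = cache.get(key);      // 1. ask the cache first
        if (value == null) {
            value = database.apply(key);    // 2. cache miss: query the database
            databaseReads++;
            if (value != null) {
                cache.put(key, value);      // 3. write the result to the cache
            }
        }
        return value;
    }

    int databaseReads() {
        return databaseReads;
    }

    public static void main(String[] args) {
        LazyLoadingSketch reader = new LazyLoadingSketch(key -> "row for " + key);
        reader.get("user:1");
        reader.get("user:1");
        System.out.println(reader.databaseReads()); // 1
    }
}
```

Only keys that are actually requested ever enter the cache, and a cold or replaced cache simply causes more database reads until it fills up again.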

Advantages of Lazy Loading

- Only requested data is cached. Since most data is never requested, lazy loading avoids filling up the cache with data that isn’t requested.

- Node failures are not fatal. When a node fails and is replaced by a new, empty node the application continues to function, though with increased latency. As requests are made to the new node each cache miss results in a query of the database and adding the data copy to the cache so that subsequent requests are retrieved from the cache.

Disadvantages of Lazy Loading

- There is a cache miss penalty – Each cache miss results in three trips: the initial request for data from the cache, the query of the database for the data, and the write of the data to the cache. This can cause a noticeable delay in data getting to the application.

- Stale data – If data is only written to the cache when there is a cache miss, data in the cache can become stale since there are no updates to the cache when data is changed in the database.

Write Through – The write-through strategy adds data or updates data in the cache whenever data is written to the database.
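A write-through write path might look like the sketch below. The names are hypothetical and both stores are plain HashMaps, so it shows only the ordering of the two writes, not a real database or ElastiCache client.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical write-through path: every database write also updates the cache.
final class WriteThroughSketch {
    private final Map<String, String> database = new HashMap<>(); // stands in for the data store
    private final Map<String, String> cache = new HashMap<>();    // stands in for ElastiCache

    void save(String key, String value) {
        database.put(key, value); // trip 1: write to the database
        cache.put(key, value);    // trip 2: write the same value to the cache
    }

    // Reads are served from the cache only, to show that it stays current.
    String readFromCache(String key) {
        return cache.get(key);
    }

    public static void main(String[] args) {
        WriteThroughSketch store = new WriteThroughSketch();
        store.save("user:1", "Ada");
        store.save("user:1", "Grace");
        System.out.println(store.readFromCache("user:1")); // Grace
        System.out.println(store.readFromCache("user:2")); // null
    }
}
```

The null for user:2 is the missing-data case: a key that was never written through this path is not in the cache until someone writes it or a lazy load fills it in.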

Advantages of Write Through

- Data in the cache is never stale. Since the data in the cache is updated every time it is written to the database, the data in the cache is always current.

- Write penalty vs. read penalty. Every write involves two trips: a write to the cache and a write to the database. This adds latency to the process. That said, end users are generally more tolerant of latency when updating data than when retrieving data. There is an inherent sense that updates require more work and thus take longer.

Disadvantages of Write Through

- Missing data. In the case of spinning up a new node, whether due to a node failure or scaling out, there is missing data which continues to be missing until it is added or updated on the database. This can be minimized by implementing Lazy Loading in conjunction with Write Through.

- Cache churn. Since most data is never read, there can be a lot of data in the cluster that is never read. This is a waste of resources. By adding a TTL, you can minimize wasted space.

Adding TTL Strategy – Lazy loading allows for stale data, but won’t fail with empty nodes. Write through ensures that data is always fresh, but may fail with empty nodes and may populate the cache with superfluous data. By adding a time to live (TTL) value to each write, we are able to enjoy the advantages of each strategy and largely avoid cluttering up the cache with superfluous data. Time to live (TTL) is an integer value that specifies the number of seconds (Redis can specify seconds or milliseconds) until the key expires. When an application attempts to read an expired key, it is treated as though the key is not found, meaning that the database is queried for the key and the cache is updated. This does not guarantee that a value is not stale, but it keeps data from getting too stale and requires that values in the cache are occasionally refreshed from the database.
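The expiry behavior can be sketched with an in-memory map. This is an illustration only: TtlCacheSketch is a hypothetical class, and the clock is injected as a LongSupplier so the example can move time forward without sleeping. In Redis the expiry is handled by the server.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.LongSupplier;

// Hypothetical TTL cache: an expired key is treated as not found.
final class TtlCacheSketch {
    private static final class Entry {
        final String value;
        final long expiresAtMillis;

        Entry(String value, long expiresAtMillis) {
            this.value = value;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    private final Map<String, Entry> entries = new HashMap<>();
    private final LongSupplier clock; // returns the current time in milliseconds

    TtlCacheSketch(LongSupplier clock) {
        this.clock = clock;
    }

    void put(String key, String value, long ttlSeconds) {
        entries.put(key, new Entry(value, clock.getAsLong() + ttlSeconds * 1000));
    }

    // Returns null for a missing or expired key, so the caller falls back to the database.
    String get(String key) {
        Entry entry = entries.get(key);
        if (entry == null) {
            return null;
        }
        if (clock.getAsLong() >= entry.expiresAtMillis) {
            entries.remove(key);
            return null;
        }
        return entry.value;
    }

    public static void main(String[] args) {
        long[] now = {0L}; // fake clock, in milliseconds
        TtlCacheSketch cache = new TtlCacheSketch(() -> now[0]);
        cache.put("user:1", "Ada", 60);
        System.out.println(cache.get("user:1")); // Ada
        now[0] = 60_000L;
        System.out.println(cache.get("user:1")); // null
    }
}
```

Combined with lazy loading, the null on an expired key triggers a fresh database read, which is what bounds how stale a cached value can get.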

Amazon ElastiCache for Memcached is a Memcached-compatible in-memory key-value store service that can be used as a cache or a data store. It delivers the performance, ease-of-use, and simplicity of Memcached. ElastiCache for Memcached is fully managed, scalable, and secure – making it an ideal candidate for use cases where frequently accessed data must be in-memory. It is a popular choice for use cases such as Web, Mobile Apps, Gaming, Ad-Tech, and E-Commerce.

Amazon ElastiCache for Memcached is a great choice for implementing an in-memory cache to decrease access latency, increase throughput, and ease the load off your relational or NoSQL database. Amazon ElastiCache can serve frequently requested items at sub-millisecond response times, and enables you to easily scale for higher loads without growing the costlier backend database layer. Database query results caching, persistent session caching, and full-page caching are all popular examples of caching with ElastiCache for Memcached.

Session stores are easy to create with Amazon ElastiCache for Memcached. Simply use the Memcached hash table, which can be distributed across multiple nodes. Scaling the session store is as easy as adding a node and updating the clients to take advantage of the new node.

Features to enhance reliability:

- Automatic detection and recovery from cache node failures.

- Automatic discovery of nodes within a cluster enabled for automatic discovery so that no changes need to be made to your application when you add or remove nodes.

- Flexible Availability Zone placement of nodes and clusters.

- Integration with other AWS services such as Amazon EC2, Amazon CloudWatch, AWS CloudTrail, and Amazon SNS to provide a secure, high-performance, managed in-memory caching solution.