AWS – DynamoDB

DynamoDB is a low-latency NoSQL database, comparable to MongoDB or Cassandra. It is fully managed by AWS, so there are no servers to provision, patch, or manage and no software to install, maintain, or operate. DynamoDB can automatically scale tables up and down (in on-demand mode or with auto scaling) to adjust capacity and maintain performance. It supports both document and key-value data models. Data is stored on SSDs and replicated across multiple Availability Zones in a Region, which makes it fast and highly available. DynamoDB global tables replicate your data across multiple AWS Regions to give you fast, local access to data for your globally distributed applications. For use cases that require even faster access with microsecond latency, DynamoDB Accelerator (DAX) provides a fully managed in-memory cache.

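As with other AWS services, the IAM role or user (access keys) your server runs as needs permissions to access DynamoDB. For a quick start you can attach the AmazonDynamoDBFullAccess managed policy, but in production grant only the actions and tables the application actually needs. You can also use the dynamodb:LeadingKeys IAM condition key to restrict users to items whose partition key matches their own identity, so they can only access their own data.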

DynamoDB supports ACID transactions, so you can build business-critical applications at scale. DynamoDB encrypts all data at rest by default and provides fine-grained identity and access control on all your tables.

DynamoDB provides both provisioned and on-demand capacity modes so that you can optimize costs by specifying capacity per workload or paying for only the resources you consume.
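In provisioned mode you size a table in read capacity units (RCUs) and write capacity units (WCUs). One RCU covers one strongly consistent read per second of an item up to 4 KB (or two eventually consistent reads), one WCU covers one write per second of an item up to 1 KB, and transactional reads and writes cost twice as much. The class below is a hypothetical helper, a minimal sketch of that arithmetic rather than an AWS API:

```java
// Hypothetical helper (not part of the AWS SDK): estimates provisioned capacity.
// 1 RCU = one strongly consistent read/s of up to 4 KB (two eventually consistent reads).
// 1 WCU = one write/s of up to 1 KB. Transactional requests cost twice as much.
final class CapacityEstimator {
    private static final int READ_UNIT_BYTES = 4 * 1024;
    private static final int WRITE_UNIT_BYTES = 1024;

    enum ReadMode { EVENTUAL, STRONG, TRANSACTIONAL }

    /** Rounds the item size up to whole capacity units (at least 1). */
    private static long units(long itemBytes, int unitBytes) {
        return Math.max(1, (itemBytes + unitBytes - 1) / unitBytes);
    }

    /** RCUs needed for readsPerSecond reads of items of itemBytes each. */
    static double readCapacityUnits(long itemBytes, long readsPerSecond, ReadMode mode) {
        long perRead = units(itemBytes, READ_UNIT_BYTES);
        switch (mode) {
            case EVENTUAL: return perRead * readsPerSecond / 2.0; // half price
            case STRONG: return perRead * readsPerSecond;
            default: return perRead * readsPerSecond * 2.0;       // transactional
        }
    }

    /** WCUs needed for writesPerSecond writes of items of itemBytes each. */
    static long writeCapacityUnits(long itemBytes, long writesPerSecond, boolean transactional) {
        long perWrite = units(itemBytes, WRITE_UNIT_BYTES);
        return perWrite * writesPerSecond * (transactional ? 2 : 1);
    }
}
```

For example, 10 strongly consistent reads per second of 6 KB items need 20 RCUs (each read rounds up to two 4 KB units), or 10 RCUs if the reads are eventually consistent.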

When to use:

a. Gaming

b. User, vehicle, and driver data stores

c. Ads technology

d. Player session history data stores

e. Inventory tracking and fulfillment

f. Shopping carts

g. User transactions

DynamoDB supports two kinds of reads:

1. Eventually consistent read (the default) – DynamoDB keeps copies of each item in multiple Availability Zones, and a write usually reaches all of them within about a second. An eventually consistent read may be served from a copy that has not caught up yet, so it might not reflect a write that completed moments ago. Repeating the read a little later returns the updated data.

2. Strongly consistent read – Returns the most up-to-date data, reflecting every write that succeeded before the read. The trade-offs are possibly higher latency, twice the read capacity of an eventually consistent read, and no support on global secondary indexes.
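The difference between the two read types can be illustrated with a toy model. The class below is hypothetical and is not how DynamoDB is implemented: one value is kept on three copies, a write updates only the first copy, and the others catch up only when replicate() runs. An eventually consistent read may land on a lagging copy; a strongly consistent read always reflects the acknowledged write.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model, not DynamoDB internals: copy 0 receives writes first,
// the other copies lag until replicate() is called.
final class ReplicatedValue {
    private final List<String> copies = new ArrayList<>();

    ReplicatedValue(String initial, int copyCount) {
        for (int i = 0; i < copyCount; i++) copies.add(initial);
    }

    /** A write is acknowledged once copy 0 has it; the rest are still stale. */
    void write(String value) {
        copies.set(0, value);
    }

    /** Propagation finishes: every copy now matches copy 0. */
    void replicate() {
        for (int i = 1; i < copies.size(); i++) copies.set(i, copies.get(0));
    }

    /** May return stale data if the chosen copy has not caught up. */
    String eventuallyConsistentRead(int copyIndex) {
        return copies.get(copyIndex);
    }

    /** Always reflects every acknowledged write. */
    String stronglyConsistentRead() {
        return copies.get(0);
    }
}
```

Right after write("new"), stronglyConsistentRead() returns "new" while eventuallyConsistentRead(2) can still return the old value until replicate() runs.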

DynamoDB Tables

- Tables

- Items (rows in SQL DB)

- Attributes (columns in SQL DB)

- Items can hold JSON-style documents (nested maps and lists); HTML or XML can be stored as string attributes

DynamoDB Primary Keys

1. Partition key (simple primary key) – The value must be unique across all items (rows), e.g. user_id. DynamoDB feeds this value into an internal hash function whose output determines the partition (physical storage) where the item is stored.

2. Composite key (partition key + sort key) – Useful for post or comment tables where rows belong to another entity, e.g. user_id as the partition key and post_timestamp as the sort key. Two or more items can share the same partition key, but then they must have different sort keys. Items with the same partition key are stored together, ordered by their sort key.
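The two key types can be sketched as an in-memory toy model. This is a hypothetical illustration, not DynamoDB's storage engine: String.hashCode() stands in for DynamoDB's internal hash function (which is not exposed), the hash picks a partition, and inside a partition the items that share a partition key are kept in a TreeMap ordered by sort key. The names userId and postTimestamp mirror the example above.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Toy model of a table with a composite primary key (userId + postTimestamp).
final class CompositeKeyTable {
    // partition index -> (partition key -> items ordered by sort key)
    private final List<Map<String, TreeMap<Long, String>>> partitions = new ArrayList<>();

    CompositeKeyTable(int partitionCount) {
        for (int i = 0; i < partitionCount; i++) partitions.add(new HashMap<>());
    }

    /** Stand-in for DynamoDB's internal hash of the partition key. */
    int partitionFor(String partitionKey) {
        return Math.floorMod(partitionKey.hashCode(), partitions.size());
    }

    /** Same partition key and same sort key overwrites, like PutItem on an existing key. */
    void put(String userId, long postTimestamp, String body) {
        partitions.get(partitionFor(userId))
                  .computeIfAbsent(userId, k -> new TreeMap<>())
                  .put(postTimestamp, body);
    }

    /** Like a Query on one partition key: items come back in ascending sort-key order. */
    List<String> query(String userId) {
        TreeMap<Long, String> items = partitions.get(partitionFor(userId)).get(userId);
        return items == null ? new ArrayList<>() : new ArrayList<>(items.values());
    }
}
```

Putting posts for "u1" at timestamps 200 and then 100 still returns them in timestamp order (100, 200) from query("u1"), because the sort key orders items inside the partition.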

DynamoDB Indexes

Local Secondary Index

– Can only be created when you create the table; you cannot add, change, or remove it later.

– Has the same partition key as the base table.

– Has a different sort key from the base table.

– Lets you query items within a partition by an alternative sort key (for example, a user's posts ordered by like count instead of by timestamp) without scanning and filtering the whole partition.

Global Secondary Index

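– Can be added when you create the table or at any time later.

– Can have a partition key and sort key that differ from the base table's.

– Lets you query efficiently on attributes that are not part of the table's primary key (for example, looking users up by email). Reads from a global secondary index are eventually consistent only.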
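A toy model makes the LSI/GSI difference concrete. The class below is hypothetical and not DynamoDB's implementation: the base table is keyed by (userId, postTs), the local secondary index keeps the same partition key (userId) but sorts by likes, and the global secondary index uses a different partition key (category). DynamoDB maintains indexes automatically on every write; here put() does it by hand, including removing the old entries when an item is overwritten.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Toy model of a base table plus one LSI and one GSI, kept in sync by put().
final class IndexedPosts {
    static final class Post {
        final String userId; final long postTs; final int likes; final String category;
        Post(String userId, long postTs, int likes, String category) {
            this.userId = userId; this.postTs = postTs; this.likes = likes; this.category = category;
        }
    }

    private final Map<String, TreeMap<Long, Post>> table = new HashMap<>();                // userId -> postTs -> post
    private final Map<String, TreeMap<Integer, List<Post>>> lsiByLikes = new HashMap<>();  // userId -> likes -> posts
    private final Map<String, TreeMap<Long, List<Post>>> gsiByCategory = new HashMap<>();  // category -> postTs -> posts

    void put(Post p) {
        Post old = table.computeIfAbsent(p.userId, k -> new TreeMap<>()).put(p.postTs, p);
        if (old != null) { // overwrite: drop the old item's index entries first
            lsiByLikes.get(old.userId).get(old.likes).remove(old);
            gsiByCategory.get(old.category).get(old.postTs).remove(old);
        }
        lsiByLikes.computeIfAbsent(p.userId, k -> new TreeMap<>())
                  .computeIfAbsent(p.likes, k -> new ArrayList<>()).add(p);
        gsiByCategory.computeIfAbsent(p.category, k -> new TreeMap<>())
                     .computeIfAbsent(p.postTs, k -> new ArrayList<>()).add(p);
    }

    /** LSI-style query: one user's posts, most-liked first. */
    List<Post> mostLiked(String userId) {
        List<Post> out = new ArrayList<>();
        TreeMap<Integer, List<Post>> byLikes = lsiByLikes.get(userId);
        if (byLikes != null) byLikes.descendingMap().values().forEach(out::addAll);
        return out;
    }

    /** GSI-style query: posts in one category across all users, oldest first. */
    List<Post> byCategory(String category) {
        List<Post> out = new ArrayList<>();
        TreeMap<Long, List<Post>> byTs = gsiByCategory.get(category);
        if (byTs != null) byTs.values().forEach(out::addAll);
        return out;
    }
}
```

The LSI query never leaves one user's partition, while the GSI query gathers items from many users because it is organized by a different partition key.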

DynamoDB with Java. I am using DynamoDBMapper, the object-mapping wrapper in the AWS SDK for Java.

For local development, I am using a Docker image of DynamoDB Local. There are other ways to install DynamoDB locally.

Run DynamoDB locally on your computer

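```java
import com.amazonaws.client.builder.AwsClientBuilder;
import com.amazonaws.regions.Regions;
import com.amazonaws.services.dynamodbv2.AmazonDynamoDB;
import com.amazonaws.services.dynamodbv2.AmazonDynamoDBClientBuilder;
import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBMapper;
import org.springframework.context.annotation.Bean;

// Inside a @Configuration class. amazonDynamoDBEndpoint (e.g. http://localhost:8000 for
// DynamoDB Local) and amazonAWSCredentialsProvider() are defined elsewhere in that class.
@Bean
public AmazonDynamoDB amazonDynamoDB() {
    return AmazonDynamoDBClientBuilder.standard()
            .withCredentials(amazonAWSCredentialsProvider())
            // Custom endpoint plus signing region: DynamoDB Local or the real service.
            .withEndpointConfiguration(new AwsClientBuilder.EndpointConfiguration(
                    amazonDynamoDBEndpoint, Regions.US_WEST_2.getName()))
            .build();
}

@Bean
public DynamoDBMapper dynamoDBMapper() {
    return new DynamoDBMapper(amazonDynamoDB());
}
```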

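```java
import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBAttribute;
import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBHashKey;
import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBTable;
import java.io.Serializable;
import java.util.Date;

@DynamoDBTable(tableName = "user")
public class User implements Serializable {

    private static final long serialVersionUID = 1L;

    // Partition (hash) key, stored as the "uuid" attribute. @DynamoDBHashKey already
    // maps the field; adding @DynamoDBAttribute too makes DynamoDBMapper reject the class.
    @DynamoDBHashKey(attributeName = "uuid")
    private String uuid;

    @DynamoDBAttribute
    private String email;

    @DynamoDBAttribute
    private String firstName;

    @DynamoDBAttribute
    private String lastName;

    @DynamoDBAttribute
    private String phoneNumber;

    @DynamoDBAttribute
    private Date createdAt; // DynamoDBMapper stores Date as an ISO-8601 string

    @DynamoDBAttribute
    private double balance; // read and updated by the balance-transfer transaction

    // setters and getters
}
```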

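```java
import com.amazonaws.services.dynamodbv2.AmazonDynamoDB;
import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBMapper;
import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBScanExpression;
import com.amazonaws.services.dynamodbv2.datamodeling.PaginatedScanList;
import com.amazonaws.services.dynamodbv2.model.CreateTableRequest;
import com.amazonaws.services.dynamodbv2.model.ProvisionedThroughput;
import com.amazonaws.services.dynamodbv2.model.ResourceNotFoundException;
import java.util.ArrayList;
import java.util.Date;
import java.util.List;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Repository;

@Repository
public class UserRepositoryImp implements UserRepository {

    private final Logger log = LoggerFactory.getLogger(this.getClass());

    @Autowired
    private AmazonDynamoDB amazonDynamoDB;

    @Autowired
    private DynamoDBMapper dynamoDBMapper;

    @Override
    public User create(User user) {
        user.setUuid(RandomGeneratorUtils.getUserUuid());
        user.setCreatedAt(new Date());
        dynamoDBMapper.save(user);
        // load() uses eventually consistent reads by default, so reading the item back
        // right after save() could return stale data; return the saved object instead.
        return user;
    }

    @Override
    public User getById(String id) {
        return dynamoDBMapper.load(User.class, id); // null if no item has this key
    }

    @Override
    public List<User> getAllUser() {
        // A Scan reads every item in the table: fine for small tables, costly for large ones.
        PaginatedScanList<User> users = dynamoDBMapper.scan(User.class, new DynamoDBScanExpression());
        return new ArrayList<>(users); // loads all result pages into memory
    }

    /** Returns true if the "user" table already existed, false if it was just created. */
    @Override
    public boolean createTable() {
        try {
            if (amazonDynamoDB.describeTable("user").getTable() != null) {
                log.debug("user table has been created already!");
                return true;
            }
        } catch (ResourceNotFoundException e) {
            // table does not exist yet, so create it below
        }

        CreateTableRequest createTableRequest = dynamoDBMapper.generateCreateTableRequest(User.class);
        createTableRequest.withProvisionedThroughput(new ProvisionedThroughput(5L, 5L));
        // createTable returns while the table is still in CREATING status.
        amazonDynamoDB.createTable(createTableRequest);
        log.debug("user table creation requested");
        return false;
    }
}
```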

Transaction

Here is an example of transferring money (balance) from one user to another in a single transaction.

```java
// Another method of UserRepositoryImp. Needs java.math.BigDecimal, java.util.*
// (Arrays, Collection, HashMap, Map) and com.amazonaws.services.dynamodbv2.model.*
// (AttributeValue, Update, TransactWriteItem, TransactWriteItemsRequest,
// TransactWriteItemsResult, ReturnConsumedCapacity and the exceptions caught below).
@Override
public boolean tranferBalance(double amount, User userA, User userB) {
    final String USER_TABLE_NAME = "user";
    final String USER_PARTITION_KEY = "uuid"; // must match @DynamoDBHashKey on User

    try {
        AttributeValue amountValue = new AttributeValue().withN(BigDecimal.valueOf(amount).toPlainString());

        // A transaction may not touch the same item twice, so each existence check
        // lives on its Update instead of in a separate ConditionCheck on the same key.
        Map<String, AttributeValue> userAKey = new HashMap<>();
        userAKey.put(USER_PARTITION_KEY, new AttributeValue(userA.getUuid()));

        Map<String, AttributeValue> withdrawValues = new HashMap<>();
        withdrawValues.put(":amount", amountValue);

        // Subtract on the server so a stale userA.getBalance() cannot overwrite a newer value;
        // the condition rejects the transfer if user A is missing or lacks funds.
        Update withdrawFromA = new Update()
                .withTableName(USER_TABLE_NAME)
                .withKey(userAKey)
                .withUpdateExpression("SET balance = balance - :amount")
                .withConditionExpression("attribute_exists(" + USER_PARTITION_KEY + ") AND balance >= :amount")
                .withExpressionAttributeValues(withdrawValues);

        Map<String, AttributeValue> userBKey = new HashMap<>();
        userBKey.put(USER_PARTITION_KEY, new AttributeValue(userB.getUuid()));

        Map<String, AttributeValue> depositValues = new HashMap<>();
        depositValues.put(":amount", amountValue);
        depositValues.put(":zero", new AttributeValue().withN("0"));

        Update depositToB = new Update()
                .withTableName(USER_TABLE_NAME)
                .withKey(userBKey)
                .withUpdateExpression("SET balance = if_not_exists(balance, :zero) + :amount")
                .withConditionExpression("attribute_exists(" + USER_PARTITION_KEY + ")")
                .withExpressionAttributeValues(depositValues);

        Collection<TransactWriteItem> actions = Arrays.asList(
                new TransactWriteItem().withUpdate(withdrawFromA),
                new TransactWriteItem().withUpdate(depositToB));

        TransactWriteItemsRequest transferTransaction = new TransactWriteItemsRequest()
                .withTransactItems(actions)
                .withReturnConsumedCapacity(ReturnConsumedCapacity.TOTAL);

        // Both updates are applied or neither is; a failed condition throws TransactionCanceledException.
        TransactWriteItemsResult result = amazonDynamoDB.transactWriteItems(transferTransaction);
        log.debug("consumed capacity={}", ObjectUtils.toJson(result.getConsumedCapacity()));
        return true;
    } catch (ResourceNotFoundException e) {
        log.error("One of the tables involved in the transaction was not found: {}", e.getMessage());
    } catch (TransactionCanceledException e) {
        log.error("Transaction canceled: {}", e.getMessage());
    } catch (InternalServerErrorException e) {
        log.error("Internal server error: {}", e.getMessage());
    } catch (Exception e) {
        log.error("Exception, msg={}", e.getLocalizedMessage());
    }
    return false;
}
```
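The all-or-nothing rule that makes this transfer safe can be modelled in memory. BalanceLedger below is a hypothetical sketch, not the AWS SDK and not how DynamoDB implements transactions: it evaluates every condition first and applies both balance changes only if all of them pass, which is the outcome TransactWriteItems guarantees. Like DynamoDB, it refuses a transfer that would touch the same item twice.

```java
import java.util.HashMap;
import java.util.Map;

// In-memory model of the transfer's transactional semantics (hypothetical, not the SDK).
final class BalanceLedger {
    private final Map<String, Double> balances = new HashMap<>();

    void open(String userId, double balance) {
        balances.put(userId, balance);
    }

    Double balanceOf(String userId) {
        return balances.get(userId);
    }

    /** Returns true if both updates were applied; false means nothing changed ("canceled"). */
    synchronized boolean transfer(String from, String to, double amount) {
        // Condition checks: two distinct existing users, positive amount, sufficient funds.
        Double fromBalance = balances.get(from);
        if (from.equals(to) || fromBalance == null || !balances.containsKey(to)
                || amount <= 0 || fromBalance < amount) {
            return false; // no partial writes
        }
        // Both writes happen together.
        balances.put(from, fromBalance - amount);
        balances.merge(to, amount, Double::sum);
        return true;
    }
}
```

A transfer of 500 from an account holding 70 returns false and leaves both balances untouched, just as a failed condition cancels the whole DynamoDB transaction.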

August 5, 2019

AWS – RDS

Amazon Relational Database Service (Amazon RDS) is a web service that makes it easier to set up, operate, and scale a relational database in the cloud. AWS RDS takes over many of the difficult or tedious management tasks of a relational database. When you use Amazon RDS, you can choose to use on-demand DB instances or reserved DB instances.

Relational Database Types

- SQL Server

- Oracle

- MySQL Server

- PostgreSQL

- Aurora

- MariaDB

What does AWS manage for you in RDS?

- When you buy a physical server, you get CPU, memory, storage, and IOPS, all bundled together. With Amazon RDS, these are split apart so that you can scale them independently: if you need more CPU, fewer IOPS, or more storage, you can allocate them separately.

- Amazon RDS manages backups, software patching, automatic failure detection, and recovery.

- Since AWS manages RDS instances, Amazon RDS doesn’t provide shell access to DB instances, and it restricts access to certain system procedures and tables that require advanced privileges.

- Amazon RDS can take automated backups on a schedule you set, and you can also create manual backup snapshots whenever you need them. You can use either kind of backup to restore a database.

- You can get high availability with a primary instance and a synchronous secondary instance that you can fail over to when problems occur. You can also use MySQL, MariaDB, or PostgreSQL Read Replicas to increase read scaling.

- In addition to the security in your database package, you can help control who can access your RDS databases by using AWS Identity and Access Management (IAM) to define users and permissions. You can also help protect your databases by putting them in a virtual private cloud.

- You can create and modify a DB instance by using the AWS Command Line Interface, the Amazon RDS API, or the AWS Management Console.

DB Instances

A DB instance can contain multiple user-created databases, and you can access it by using the same tools and applications that you use with a stand-alone database instance.

You can select the DB instance class that best meets your needs and change it later if your needs change. DB instance storage comes in three types: Magnetic (a previous-generation type kept for backward compatibility), General Purpose (SSD), and Provisioned IOPS (PIOPS). They differ in performance characteristics and price, so you can tailor storage performance and cost to the needs of your database.

Security

A security group controls access to a DB instance. It does so by allowing access to IP address ranges or Amazon EC2 instances that you specify.

Monitoring

There are several ways to track the performance and health of a DB instance. Amazon CloudWatch collects metrics for the instance, and performance charts are shown in the Amazon RDS console. You can also subscribe to Amazon RDS events to be notified when changes occur with a DB instance, DB snapshot, DB parameter group, or DB security group.

Manage DB instances with the AWS CLI

```shell
# List DB instances
aws rds describe-db-instances

# Start, stop, or reboot a DB instance (test-instance is the DB instance identifier)
aws rds start-db-instance --db-instance-identifier test-instance
aws rds stop-db-instance --db-instance-identifier test-instance
aws rds reboot-db-instance --db-instance-identifier test-instance
```

AWS – CLI

How to set up the AWS CLI on your computer

Run this command to create the default profile:

```shell
aws configure
```

If you have multiple AWS accounts, create a named profile for each one:

```shell
aws configure [--profile profile-name]
```

For example:

```shell
aws configure --profile company
```

Then pass the same profile when you make a CLI call. For example, to log in to ECR:

```shell
# AWS CLI v1
aws ecr get-login --profile company
```

In AWS CLI v2, get-login was removed; use aws ecr get-login-password --profile company instead and pipe its output to docker login.
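aws configure stores credentials in ~/.aws/credentials, with one [section] per profile, and settings such as the region in ~/.aws/config, where named profiles appear as [profile name]. The hypothetical helper below is a minimal sketch that lists profile names by reading only those section headers; it is not an AWS SDK API and ignores everything else in the files.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: lists profile names from the text of ~/.aws/credentials
// (sections like [company]) or ~/.aws/config (sections like [profile company]).
final class AwsProfiles {
    static List<String> profileNames(String iniText, boolean isConfigFile) {
        List<String> names = new ArrayList<>();
        for (String raw : iniText.split("\\R")) { // split on any line break
            String line = raw.trim();
            if (!line.startsWith("[") || !line.endsWith("]")) continue; // not a section header
            String section = line.substring(1, line.length() - 1).trim();
            if (isConfigFile && section.startsWith("profile ")) {
                section = section.substring("profile ".length()).trim();
            }
            names.add(section);
        }
        return names;
    }
}
```

After running aws configure and aws configure --profile company, both files would yield the names default and company.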

Load AWS CLI parameters from a file

Sometimes it’s convenient to load a parameter value from a file instead of trying to type it all as a command line parameter value, such as when the parameter is a complex JSON string. To specify a file that contains the value, specify a file URL in the following format.

file://complete/path/to/file

```shell
# Read from a file in the current directory
aws ec2 describe-instances --filters file://filter.json
```

AWS – Route 53

Amazon Route 53 is a highly available and scalable cloud Domain Name System (DNS) web service. It is designed to give developers and businesses an extremely reliable and cost-effective way to route end users to Internet applications by translating names like www.example.com into the numeric IP addresses like 192.0.2.1 that computers use to connect to each other. Amazon Route 53 is fully compliant with IPv6 as well.

Amazon Route 53 effectively connects user requests to infrastructure running in AWS, such as Amazon EC2 instances, Elastic Load Balancing load balancers, or Amazon S3 buckets, and can also be used to route users to infrastructure outside of AWS. You can use Amazon Route 53 to configure DNS health checks to route traffic to healthy endpoints or to independently monitor the health of your application and its endpoints.

Amazon Route 53 Traffic Flow lets you manage traffic globally through a variety of routing types, including Latency Based Routing, Geo DNS, Geoproximity, and Weighted Round Robin, all of which can be combined with DNS Failover to enable a variety of low-latency, fault-tolerant architectures. Using the Traffic Flow visual editor, you can manage how your end users are routed to your application's endpoints, whether in a single AWS Region or distributed around the globe.

Amazon Route 53 also offers domain name registration: you can purchase and manage domain names such as example.com, and Amazon Route 53 will automatically configure DNS settings for your domains.
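With weighted routing, Route 53 answers each DNS query with one record from a group, choosing each record with probability weight / (sum of all weights). The class below is a minimal sketch of that selection rule, not Route 53 code; the endpoint names are made up, and the caller passes a uniform random number in [0, 1) so the choice is deterministic and easy to test.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Sketch of weighted record selection: P(record) = weight / sum of weights.
final class WeightedRouter {
    private final Map<String, Integer> weights = new LinkedHashMap<>();

    WeightedRouter add(String endpoint, int weight) {
        if (weight < 0) throw new IllegalArgumentException("weight must be >= 0");
        weights.put(endpoint, weight);
        return this;
    }

    /** uniform01 must be in [0, 1); records with weight 0 are never chosen. */
    String pick(double uniform01) {
        if (uniform01 < 0 || uniform01 >= 1) throw new IllegalArgumentException("uniform01 must be in [0, 1)");
        int total = weights.values().stream().mapToInt(Integer::intValue).sum();
        if (total == 0) throw new IllegalStateException("no record has a positive weight");
        double target = uniform01 * total;
        double cumulative = 0;
        for (Map.Entry<String, Integer> e : weights.entrySet()) {
            cumulative += e.getValue();
            if (target < cumulative) return e.getKey();
        }
        throw new IllegalStateException("unreachable for uniform01 in [0, 1)");
    }
}
```

With weights 3 and 1, about 75% of answers point at the first endpoint. Route 53 itself spreads traffic evenly when every record in the group has weight 0; this sketch does not model that case.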

August 5, 2019

AWS – Load Balancer

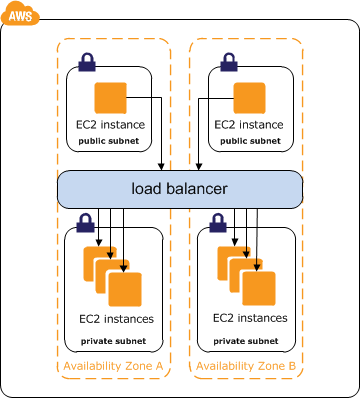

Elastic Load Balancing (ELB) automatically distributes incoming application traffic across multiple targets, such as Amazon EC2 instances, containers, IP addresses, and Lambda functions.

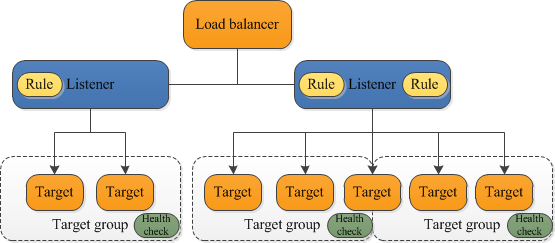

A load balancer serves as the single point of contact for clients. The load balancer distributes incoming application traffic across multiple targets, such as EC2 instances, in multiple Availability Zones. This increases the availability of your application. You add one or more listeners to your load balancer; a listener checks for connection requests from clients using the protocol and port that you configure.

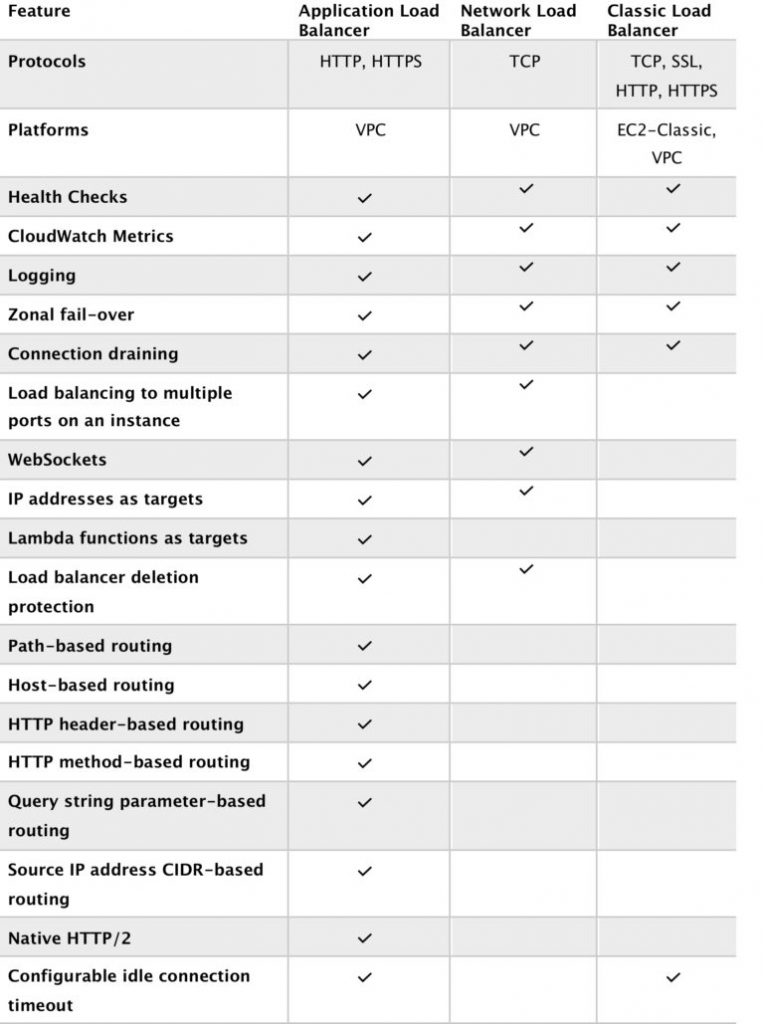

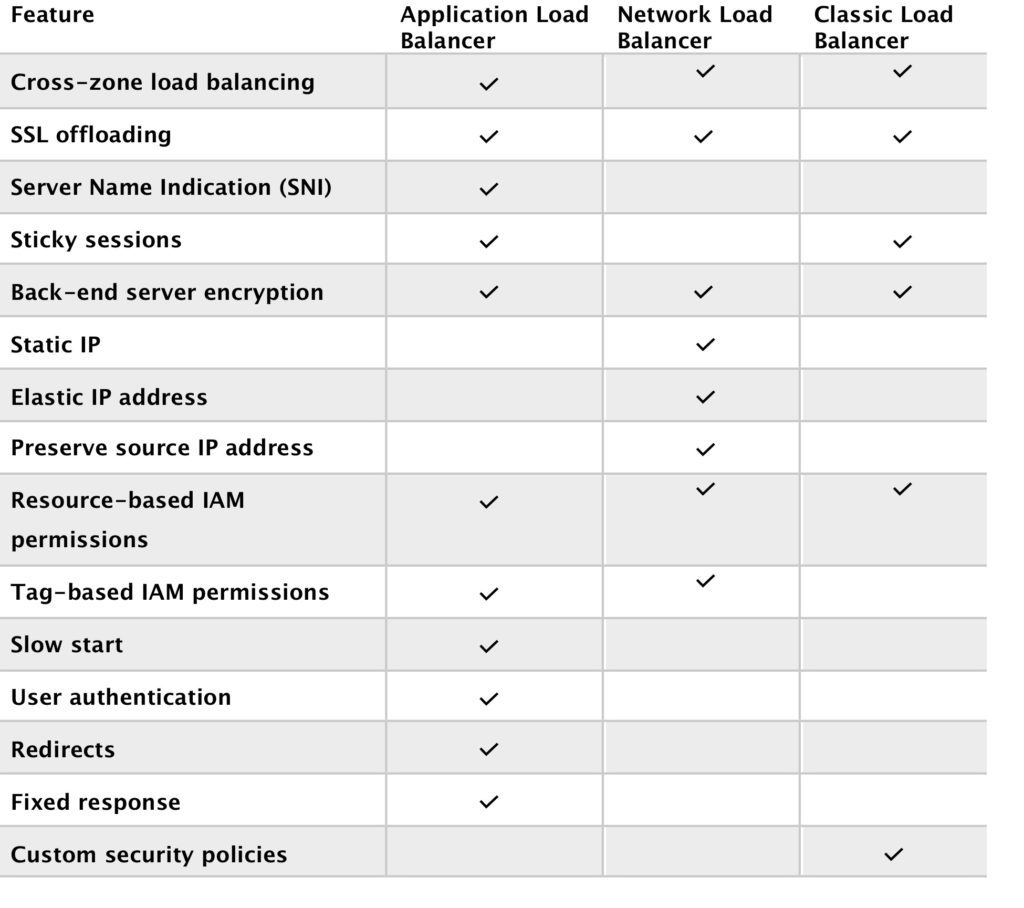

Types of Load Balancer

- Application Load Balancer – an Application Load Balancer functions at the application layer, the seventh layer of the Open Systems Interconnection (OSI) model. After the load balancer receives a request, it evaluates the listener rules in priority order to determine which rule to apply and then selects a target from the target group for the rule action. You can configure listener rules to route requests to specific webservers or different target groups based on the content of the application traffic. Routing is performed independently for each target group, even when a target is registered with multiple target groups.

- Network Load Balancer – a Network Load Balancer functions at the fourth (transport) layer of the OSI model. It can handle millions of requests per second. After the load balancer receives a connection request, it selects a target from the target group for the default rule. It attempts to open a TCP connection to the selected target on the port specified in the listener configuration. It is best suited for load balancing TCP traffic where extreme performance is required.

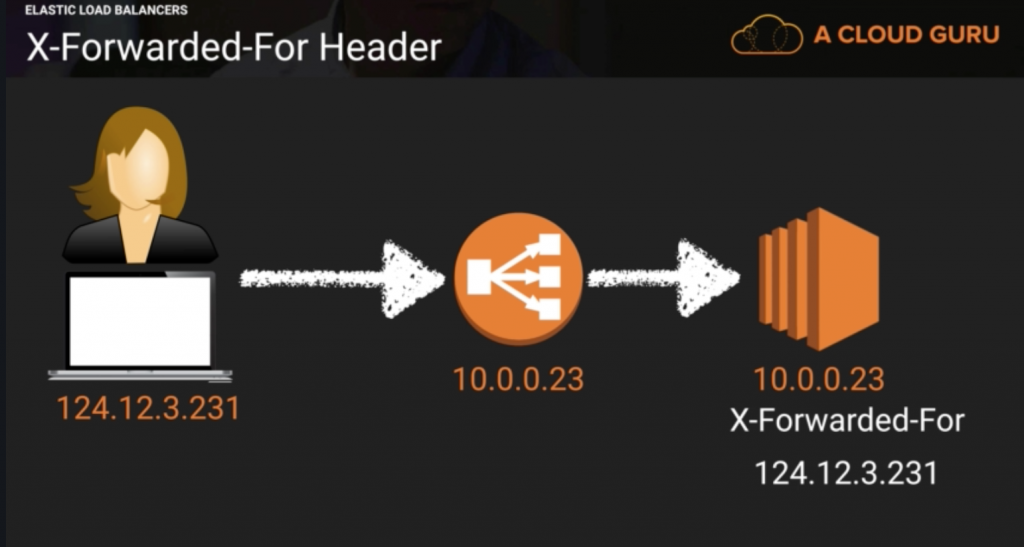

- Classic Load Balancer – distributes incoming application traffic across multiple EC2 instances in multiple Availability Zones, which increases the fault tolerance of your applications. Elastic Load Balancing detects unhealthy instances and routes traffic only to healthy instances. It is the legacy (previous-generation) load balancer. You can load balance HTTP/HTTPS applications and use layer 7-specific features, such as X-Forwarded-For headers and sticky sessions. You can also use strict layer 4 load balancing for applications that rely purely on the TCP protocol.
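The Application Load Balancer's rule evaluation can be sketched as follows. This is a simplified model, not the ELB API: rules are checked in priority order (lowest number first), the first matching rule decides the target group, and the listener's default action applies when nothing matches. Only a path-prefix condition is modelled here; real ALB rules use path patterns with wildcards and can also match host headers, HTTP headers, request methods, query strings, and source IPs. The target group names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Simplified ALB listener: first matching rule by priority wins, else the default action.
final class ListenerRules {
    private static final class Rule {
        final int priority; final String pathPrefix; final String targetGroup;
        Rule(int priority, String pathPrefix, String targetGroup) {
            this.priority = priority; this.pathPrefix = pathPrefix; this.targetGroup = targetGroup;
        }
    }

    private final List<Rule> rules = new ArrayList<>();
    private final String defaultTargetGroup;

    ListenerRules(String defaultTargetGroup) {
        this.defaultTargetGroup = defaultTargetGroup;
    }

    ListenerRules addRule(int priority, String pathPrefix, String targetGroup) {
        rules.add(new Rule(priority, pathPrefix, targetGroup));
        rules.sort(Comparator.comparingInt(r -> r.priority)); // keep priority order
        return this;
    }

    String route(String path) {
        for (Rule r : rules) {
            if (path.startsWith(r.pathPrefix)) return r.targetGroup;
        }
        return defaultTargetGroup;
    }
}
```

If "/api/admin" has priority 10 and "/api/" has priority 20, a request for /api/admin/users goes to the admin target group even though both prefixes match, because the lower priority number is evaluated first.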

Load Balancer Benefits

- Using a load balancer increases the availability and fault tolerance of your applications. A load balancer distributes workloads across multiple compute resources, such as virtual servers.

- You can add and remove compute resources from your load balancer as your needs change, without disrupting the overall flow of requests to your applications.

- You can configure health checks, which are used to monitor the health of the compute resources so that the load balancer can send requests only to the healthy ones.

- You can also offload the work of encryption and decryption to your load balancer so that your compute resources can focus on their main work.
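The health-check benefit above can be sketched in a few lines. HealthAwareBalancer is a hypothetical class, not ELB's implementation: it only sends requests to targets whose last health check passed, and rotates round-robin among them as a simple example policy. Health is reported by hand here instead of by real health-check probes, and the target IDs are made up.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Hypothetical sketch: route only to healthy targets, round-robin among them.
final class HealthAwareBalancer {
    private final Map<String, Boolean> healthy = new LinkedHashMap<>(); // target -> last check passed
    private int next = 0;

    void register(String target) { healthy.put(target, true); }

    void deregister(String target) { healthy.remove(target); }

    /** Records the result of a health check for a registered target. */
    void reportHealth(String target, boolean ok) { healthy.computeIfPresent(target, (t, old) -> ok); }

    /** Next healthy target, or empty if no target is currently healthy. */
    Optional<String> nextTarget() {
        List<String> candidates = new ArrayList<>();
        healthy.forEach((t, ok) -> { if (ok) candidates.add(t); });
        if (candidates.isEmpty()) return Optional.empty();
        String chosen = candidates.get(Math.floorMod(next, candidates.size()));
        next++;
        return Optional.of(chosen);
    }
}
```

Registering or deregistering targets changes only the candidate list, so clients keep talking to the same load balancer while capacity is added or removed.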

Load Balancer Errors

If a back-end instance stops working or does not respond within the load balancer's idle timeout, the Classic Load Balancer returns an HTTP 504 (Gateway Timeout) error.

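X-Forwarded-For Header

Because the load balancer sits between clients and your servers, the servers see the load balancer's IP address as the source of each request. For HTTP and HTTPS load balancers, the X-Forwarded-For request header carries the IP address of the client; if a request already contains this header, Elastic Load Balancing appends the client's address to it.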
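On a server behind an HTTP/HTTPS load balancer, the original client address has to be read from the X-Forwarded-For header, a comma-separated list of addresses. Because Elastic Load Balancing appends the address of the client that connected to it, the right-most entry is the one the load balancer itself observed; entries to its left were supplied by the client or upstream proxies and can be forged. ForwardedFor is a hypothetical helper, a minimal sketch independent of any web framework; remoteAddr stands for the socket peer address your server sees.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper for parsing the X-Forwarded-For request header.
final class ForwardedFor {
    /** Splits the header into trimmed, non-empty addresses, left to right. */
    static List<String> parse(String headerValue) {
        List<String> hops = new ArrayList<>();
        if (headerValue == null) return hops;
        for (String part : headerValue.split(",")) {
            String ip = part.trim();
            if (!ip.isEmpty()) hops.add(ip);
        }
        return hops;
    }

    /** Address the load balancer saw (right-most entry); falls back to remoteAddr without the header. */
    static String clientIpSeenByLoadBalancer(String headerValue, String remoteAddr) {
        List<String> hops = parse(headerValue);
        return hops.isEmpty() ? remoteAddr : hops.get(hops.size() - 1);
    }
}
```

For a header value of "203.0.113.7, 198.51.100.2", the load balancer connected to 198.51.100.2, while 203.0.113.7 is only what that client claims the original address was.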

Internet Facing ELB

- ELB nodes have public IPs

- Routes traffic to the private IP addresses of the EC2 instances

- You need one public subnet in each AZ where the ELB is defined

- ELB DNS name format: <name>-<id-number>.<region>.elb.amazonaws.com

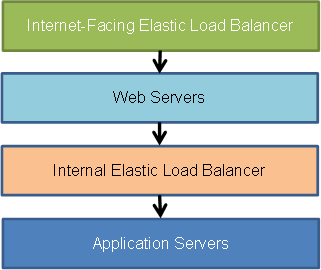

- The internet-facing load balancer has public IP addresses and the usual Elastic Load Balancer DNS name. Your web servers can use private IP addresses and restrict traffic to requests coming from the internet-facing load balancer. The web servers, in turn, make requests to the internal load balancer, using private IP addresses that are resolved from the internal load balancer's DNS name, which begins with internal-. The internal load balancer routes requests to the application servers, which also use private IP addresses and only accept requests from the internal load balancer.

Internal Load Balancer

- ELB nodes have private IPs

- Routes traffic to the private IP addresses of the EC2 instances

- ELB DNS name format: internal-<name>-<id-number>.<region>.elb.amazonaws.com

AWS Load Balancer Developer Guide

August 5, 2019