AWS – EC2

EC2 is an AWS web service that provides scalable and resizable compute capacity in the cloud. Using Amazon EC2 eliminates your need to invest in hardware upfront, so you can develop and deploy applications faster. You can use Amazon EC2 to launch as many or as few virtual servers as you need, configure security and networking, and manage storage. Amazon EC2 enables you to scale up or down to handle changes in requirements or spikes in popularity, reducing your need to forecast traffic.

EC2 Options

On-Demand – lets you pay a fixed hourly rate with no upfront commitment.

Reserved – provides you with a capacity reservation and offers a discount on the hourly charge for an instance. This requires committing to a one- or three-year term.

Spot – enables you to bid whatever price you like for an instance. This is a great fit if your application has flexible start and end times. If a Spot instance is terminated by AWS, you are not charged for the partial hour of usage. However, if you terminate it yourself, you are charged for the full hour.

Dedicated Hosts – physical servers dedicated to your use. They can help you reduce costs by letting you use your existing server-bound software licenses.

EC2 Instance Types – FIGHT MC PIX

F – FPGA (Field Programmable Gate Array)

I – IOPS (High-Speed Storage)

G – Graphics Intensive

H – High disk throughput

D – Density (Dense Storage)

R – RAM

M – Main choice for general-purpose apps

C – Compute (Compute Optimized)

P – Graphics (think pics) (General Purpose GPU)

X – Extreme Memory

EBS

Amazon EBS allows you to create storage volumes and attach them to EC2 instances. Once attached, you can create a file system on a volume, run a database on it, or use it like any other block device. Each EBS volume is automatically replicated within its Availability Zone to protect you from component failure.

- General Purpose SSD – General purpose SSD volume that balances price and performance for a wide variety of workloads.

- Provisioned IOPS SSD – Highest-performance SSD volume for mission-critical low-latency or high-throughput workloads. It is designed for high-intensity applications such as SQL or NoSQL database servers.

- Throughput Optimized HDD – Low-cost HDD volume designed for frequently accessed, throughput-intensive workloads. It can handle big data and data warehousing. It can’t be a boot volume.

- Cold HDD – Lowest cost HDD volume designed for less frequently accessed workloads. It can’t be a boot volume. It can be a file server.

- Magnetic – Lowest cost per gigabyte of all bootable EBS volume types. Magnetic volumes are a good fit for data that is accessed infrequently.

- Use instance storage to store temporary data.

- Understand the implications of the root device type for data persistence, backup, and recovery.

- Use separate Amazon EBS volumes for the operating system versus your data. Ensure that the volume with your data persists after instance termination.

- Use the instance store available for your instance to store temporary data. Remember that the data stored in instance store is deleted when you stop or terminate your instance.

- Regularly patch, update, and secure the operating system and applications on your instance.

- Implement the least permissive rules for your security group.

- Manage access to AWS resources and APIs using identity federation, IAM users, and IAM roles. Establish credential management policies and procedures for creating, distributing, rotating, and revoking AWS access credentials.

- View your current limits for Amazon EC2. Plan to request any limit increases in advance of the time that you’ll need them.

- Ensure that you are prepared to handle failover. For a basic solution, you can manually attach a network interface or Elastic IP address to a replacement instance.

- Regularly test the process of recovering your instances and Amazon EBS volumes if they fail.

- Deploy critical components of your application across multiple Availability Zones, and replicate your data appropriately.

- Regularly back up your EBS volumes.

AWS – IAM

IAM stands for Identity and Access Management. It is the AWS web service that manages your users and their access to your AWS resources. You use IAM to control who is authenticated and authorized to use AWS resources such as EC2 servers, SQS queues, or Route 53. If you manage your company’s AWS infrastructure, it is important to know what IAM is and how it works.

IAM is universal (global), which means that you have one IAM service for all regions; users, groups, roles, and policies are not tied to a region.

The “root account” is the account that you set up when you sign up with AWS. This account has admin access to your AWS resources. As a best practice, do not use your root user credentials for your daily work. Instead, create IAM entities (users and roles) for your daily work. It is highly recommended that you do not share your root user credentials with anyone because doing so gives them unrestricted access to your account. It is not possible to restrict the permissions that are granted to the root user.

It is highly recommended that you create an IAM user for yourself and then assign yourself administrative permissions for your account. You can then sign in as that user and add more users as needed. Also set up multi-factor authentication (MFA) for yourself and for all users in your AWS account. This adds another layer of security to your AWS environment.

What does IAM do?

- Authenticates and authorizes your principals (users and roles) to perform actions on AWS resources (its biggest task).

- Centralizes control of your AWS account.

- Lets you share access to your account.

- Provides granular permissions.

- Supports multi-factor authentication for users.

- Lets you enforce password rotation for users.

Secret and Access keys

You can view the secret access key only once, when you create a new user or generate new keys, so save it in a secure location. The access key ID remains visible in the console afterwards.

You use secret and access keys to make requests to AWS APIs from your code or from the AWS CLI.

You don’t use your secret and access keys to log in to your AWS console.
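One common way to keep keys out of source code is to read them from the environment at run time. The sketch below is a minimal illustration, not an AWS SDK API: the class `AccessKeyLookup` and its nested `AccessKeys` holder are hypothetical names, while `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` are the environment variable names that the AWS CLI and SDKs read.

```java
import java.util.Map;
import java.util.Optional;

public class AccessKeyLookup {

    // Hypothetical holder for the two halves of an access key pair.
    static final class AccessKeys {
        final String accessKeyId;
        final String secretAccessKey;

        AccessKeys(String accessKeyId, String secretAccessKey) {
            this.accessKeyId = accessKeyId;
            this.secretAccessKey = secretAccessKey;
        }
    }

    // Reads the key pair from an environment-style map; empty if either value is missing.
    static Optional<AccessKeys> fromEnvironment(Map<String, String> env) {
        String id = env.get("AWS_ACCESS_KEY_ID");
        String secret = env.get("AWS_SECRET_ACCESS_KEY");
        if (id == null || id.isEmpty() || secret == null || secret.isEmpty()) {
            return Optional.empty();
        }
        return Optional.of(new AccessKeys(id, secret));
    }

    public static void main(String[] args) {
        Optional<AccessKeys> keys = fromEnvironment(System.getenv());
        // Never print the secret itself; only report whether a key pair was found.
        System.out.println(keys.isPresent() ? "Access keys found" : "No access keys configured");
    }
}
```

Taking the environment as a `Map` parameter keeps the lookup easy to test without touching the real process environment.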

Users – a user is an entity that you create in AWS to represent the person or application that uses it to interact with AWS. A user in AWS consists of a name and credentials. Think of this as people who have access to your AWS account. An IAM user doesn’t have to represent an actual person; you can create an IAM user in order to generate an access key for an application that runs in your corporate network and needs AWS access.

Groups – a collection of users under a set of permissions.

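Roles – a role specifies a set of permissions that you can use to access the AWS resources that you need. It is similar to a user, but it is assumed temporarily by whoever needs it instead of belonging to one person. You mostly use roles when you are already in one AWS resource, such as an EC2 instance, and you want to use another AWS resource.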

Policies – a policy is an object (document) in AWS that, when associated with an identity or resource, defines their permissions. AWS evaluates these policies when a principal entity (user or role) makes a request. Permissions in the policies determine whether the request is allowed or denied.

Principals – a person or application that uses the AWS account root user, an IAM user, or an IAM role to sign in and make requests to AWS.

Authentication – to authenticate from the console as a root user, you must sign in with your email address and password. As an IAM user, provide your account ID or alias, and then your user name and password. To authenticate from the API or AWS CLI, you must provide your access key and secret key. You might also be required to provide additional security information.

Authorization – you must also be authorized (allowed) to complete your request. During authorization, AWS uses values from the request context to check for policies that apply to the request. It then uses the policies to determine whether to allow or deny the request.
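The allow-or-deny decision can be sketched as a small function. This is a deliberately simplified model, not the real IAM engine: the `Statement` class and `isAllowed` method are hypothetical, matching is by exact string only, and wildcards, conditions, permission boundaries, and resource-based policies are ignored. It shows the basic rule for identity-based policies: a request is denied by default, an explicit allow permits it, and an explicit deny overrides any allow.

```java
import java.util.Arrays;
import java.util.List;

public class PolicyEvaluationSketch {

    enum Effect { ALLOW, DENY }

    // Hypothetical, simplified policy statement: one effect, one action, one resource.
    static final class Statement {
        final Effect effect;
        final String action;
        final String resource;

        Statement(Effect effect, String action, String resource) {
            this.effect = effect;
            this.action = action;
            this.resource = resource;
        }

        // Exact match only; real policies also support wildcards and conditions.
        boolean matches(String requestedAction, String requestedResource) {
            return action.equals(requestedAction) && resource.equals(requestedResource);
        }
    }

    // Explicit deny wins, then explicit allow; anything else is denied by default.
    static boolean isAllowed(List<Statement> statements, String action, String resource) {
        boolean allowed = false;
        for (Statement statement : statements) {
            if (!statement.matches(action, resource)) {
                continue;
            }
            if (statement.effect == Effect.DENY) {
                return false;
            }
            allowed = true;
        }
        return allowed;
    }

    public static void main(String[] args) {
        String object = "arn:aws:s3:::example-bucket/report.csv"; // hypothetical resource
        List<Statement> statements = Arrays.asList(
                new Statement(Effect.ALLOW, "s3:GetObject", object),
                new Statement(Effect.ALLOW, "s3:DeleteObject", object),
                new Statement(Effect.DENY, "s3:DeleteObject", object));

        System.out.println(isAllowed(statements, "s3:GetObject", object));    // true
        System.out.println(isAllowed(statements, "s3:DeleteObject", object)); // false
        System.out.println(isAllowed(statements, "s3:PutObject", object));    // false
    }
}
```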

Resource – an AWS resource is an object that exists within a service. Examples include an Amazon EC2 instance, an IAM user, and an Amazon S3 bucket.

Policy – 3 types of IAM policies

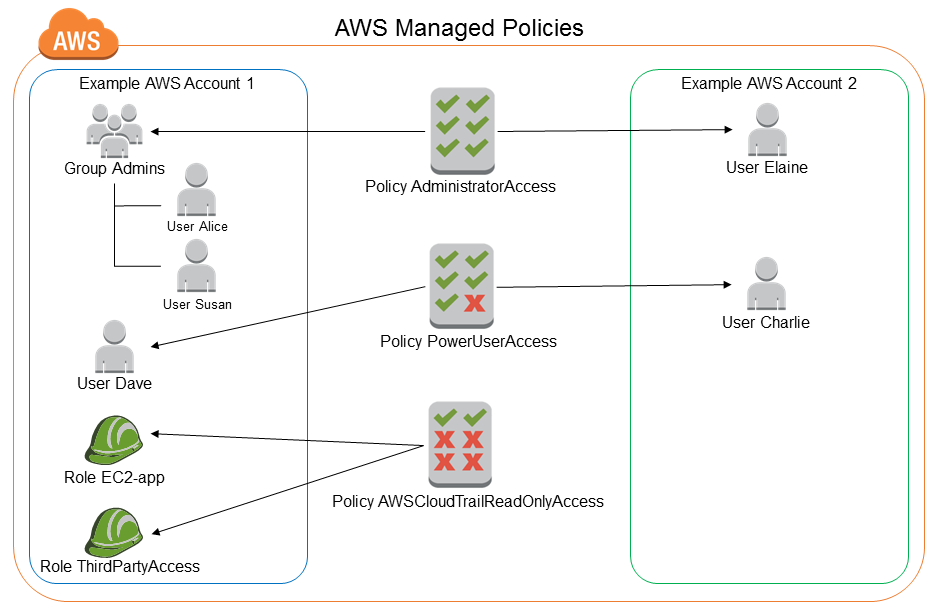

AWS Managed Policy – an AWS managed policy is created and administered by AWS and can’t be changed by users. AWS managed policies are designed to provide permissions for many common use cases. Full-access AWS managed policies such as AmazonDynamoDBFullAccess and IAMFullAccess define permissions for service administrators by granting full access (create, read, update, delete) to a service.

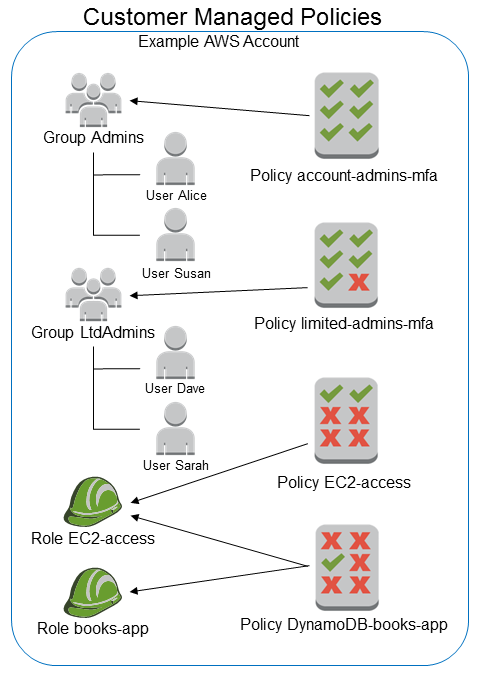

Customer Managed Policy – Customer managed policies are policies that you create and that you can attach to multiple users, groups, or roles in your AWS account. You have complete control over these policies. A great way to create a customer-managed policy is to start by copying an existing AWS managed policy. That way you know that the policy is correct at the beginning and all you need to do is customize it to your environment.

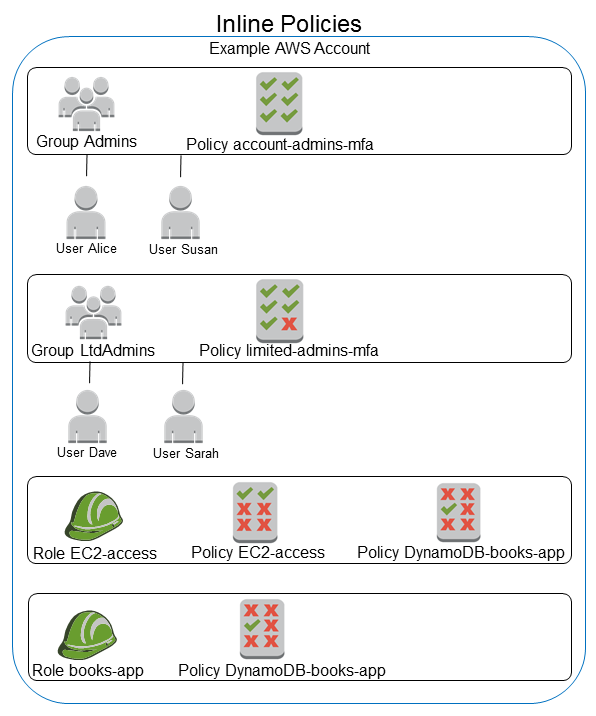

Inline Policy – an inline policy is a policy that’s embedded in a principal entity (a user, group, or role)—that is, the policy is an inherent part of the principal entity. Once you delete the entity the inline policy goes with it.

- Throw away your root account access keys. In 99% of cases, you don’t need them. I have not found a reason to use root user access keys.

- Create individual users for whoever needs access to your AWS environment. Don’t share credentials, especially the root account console login and access keys.

- Use groups to give users permissions, for example backend-dev for backend developers, frontend-dev for frontend developers, data for data developers, or dev-ops.

- Grant the least privilege. When you create IAM policies, grant only the permissions required to perform a task. Determine what users (and roles) need to do and then craft policies that allow them to perform only those tasks.

- Use an AWS managed policy before creating a customer-managed policy.

- Use a customer-managed policy before creating an inline policy.

Array Parallel Sort

The sorting algorithm is a parallel sort-merge that breaks the array into sub-arrays that are themselves sorted and then merged. When the sub-array length reaches a minimum granularity, the sub-array is sorted using the appropriate Arrays.sort method. If the length of the specified array is less than the minimum granularity, then it is sorted using the appropriate Arrays.sort method. The algorithm requires a working space no greater than the size of the original array. The ForkJoin common pool is used to execute any parallel tasks.

    import java.util.Arrays;

    public class ParallelArraySort {

        public static void main(String[] args) {
            int[] intArray = {73, 1, 14, 2, 15, 12, 7, 45};

            System.out.println("---Before Parallel Sort---");
            for (int i : intArray) {
                System.out.print(i + " ");
            }

            // Parallel sorting (in place)
            Arrays.parallelSort(intArray);

            System.out.println("\n---After Parallel Sort---");
            for (int i : intArray) {
                System.out.print(i + " ");
            }
        }
    }

Result:

    ---Before Parallel Sort---
    73 1 14 2 15 12 7 45
    ---After Parallel Sort---
    1 2 7 12 14 15 45 73
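To make the sort-merge description concrete, here is a minimal fork/join merge sort in the same spirit. It is a sketch of the technique, not the JDK's actual implementation: the class `ForkJoinMergeSort`, the nested `SortTask`, and the `MIN_GRANULARITY` value are all assumptions chosen for illustration. Like the description above, it falls back to `Arrays.sort` below a threshold, uses a working buffer the same size as the input, and runs on the ForkJoin common pool.

```java
import java.util.Arrays;
import java.util.Random;
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveAction;

public class ForkJoinMergeSort {

    // Assumed threshold for illustration; the JDK chooses its own granularity.
    private static final int MIN_GRANULARITY = 1 << 13;

    static final class SortTask extends RecursiveAction {
        private final int[] data;
        private final int[] buffer; // working space, same size as the input
        private final int from;     // inclusive
        private final int to;       // exclusive

        SortTask(int[] data, int[] buffer, int from, int to) {
            this.data = data;
            this.buffer = buffer;
            this.from = from;
            this.to = to;
        }

        @Override
        protected void compute() {
            if (to - from <= MIN_GRANULARITY) {
                Arrays.sort(data, from, to); // small enough: sort sequentially
                return;
            }
            int mid = (from + to) >>> 1;
            // Sort both halves in parallel, then merge them.
            invokeAll(new SortTask(data, buffer, from, mid),
                      new SortTask(data, buffer, mid, to));
            merge(mid);
        }

        private void merge(int mid) {
            System.arraycopy(data, from, buffer, from, to - from);
            int left = from;
            int right = mid;
            int out = from;
            while (left < mid && right < to) {
                data[out++] = buffer[left] <= buffer[right] ? buffer[left++] : buffer[right++];
            }
            while (left < mid) {
                data[out++] = buffer[left++];
            }
            while (right < to) {
                data[out++] = buffer[right++];
            }
        }
    }

    public static void sort(int[] data) {
        ForkJoinPool.commonPool().invoke(new SortTask(data, new int[data.length], 0, data.length));
    }

    public static void main(String[] args) {
        int[] data = new Random(42).ints(100_000).toArray();
        int[] expected = data.clone();
        Arrays.sort(expected);
        sort(data);
        System.out.println("Matches Arrays.sort: " + Arrays.equals(expected, data));
    }
}
```

In real code, prefer `Arrays.parallelSort`; the sketch only shows why small arrays gain nothing from the parallel path, since they are handed straight to the sequential sort.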

Let’s check how efficient parallel sort is compared to sequential sort.

    // Requires java.util.Arrays and java.util.Random
    private static void timeSort() {
        int[] arraySizes = { 10000, 100000, 1000000, 10000000 };

        for (int arraySize : arraySizes) {
            System.out.println("When Array size = " + arraySize);

            // Fill the array with random values
            int[] intArray = new int[arraySize];
            Random random = new Random();
            for (int i = 0; i < arraySize; i++) {
                intArray[i] = random.nextInt(arraySize) + random.nextInt(arraySize);
            }

            // Sort identical copies so both methods get the same input
            int[] forSequential = Arrays.copyOf(intArray, intArray.length);
            int[] forParallel = Arrays.copyOf(intArray, intArray.length);

            long startTime = System.currentTimeMillis();
            Arrays.sort(forSequential);
            long endTime = System.currentTimeMillis();
            System.out.println("Sequential Sort Milli seconds: " + (endTime - startTime));

            startTime = System.currentTimeMillis();
            Arrays.parallelSort(forParallel);
            endTime = System.currentTimeMillis();
            System.out.println("Parallel Sort Milli seconds: " + (endTime - startTime));

            System.out.println("------------------------------");
        }
    }

Result:

    When Array size = 10000
    Sequential Sort Milli seconds: 3
    Parallel Sort Milli seconds: 6
    ------------------------------
    When Array size = 100000
    Sequential Sort Milli seconds: 11
    Parallel Sort Milli seconds: 27
    ------------------------------
    When Array size = 1000000
    Sequential Sort Milli seconds: 148
    Parallel Sort Milli seconds: 28
    ------------------------------
    When Array size = 10000000
    Sequential Sort Milli seconds: 873
    Parallel Sort Milli seconds: 215
    ------------------------------

As you can see, when the array size is small, sequential sorting performs better, but when the array size is large, parallel sorting performs better. To decide which sorting method to use, you must know the size of the array and determine whether that size is small or large for your use case.
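A single timing per array size can be skewed by JIT compilation and other start-up effects, so it may be worth repeating the measurement. The sketch below is one possible approach, not a rigorous benchmark: the class `SortTiming` and the method `bestOf` are hypothetical names, and the warm-up and run counts are arbitrary choices.

```java
import java.util.Arrays;
import java.util.Random;

public class SortTiming {

    // Returns the smallest elapsed time in nanoseconds over several runs, each on a fresh copy.
    static long bestOf(int runs, int[] source, boolean parallel) {
        long best = Long.MAX_VALUE;
        for (int run = 0; run < runs; run++) {
            int[] copy = Arrays.copyOf(source, source.length);
            long start = System.nanoTime();
            if (parallel) {
                Arrays.parallelSort(copy);
            } else {
                Arrays.sort(copy);
            }
            best = Math.min(best, System.nanoTime() - start);
        }
        return best;
    }

    public static void main(String[] args) {
        int[] source = new Random(42).ints(1_000_000).toArray();

        // Warm-up rounds: results are discarded so the JIT can compile the hot paths first.
        bestOf(3, source, false);
        bestOf(3, source, true);

        System.out.println("Sequential best (ms): " + bestOf(5, source, false) / 1_000_000);
        System.out.println("Parallel best (ms): " + bestOf(5, source, true) / 1_000_000);
    }
}
```

The absolute numbers will differ by machine and core count, so treat them as a guide for your own environment only.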

Also, you can sort part of an array by passing a start index (inclusive) and an end index (exclusive).

    private static void sortPartial() {
        int[] intArray = { 18, 1, 14, 2, 15, 12, 5, 4 };

        System.out.println("Array Length " + intArray.length);

        System.out.println("---Before Parallel Sort---");
        for (int i : intArray) {
            System.out.print(i + " ");
        }

        // Parallel sorting of indexes 0 to 3 only (the end index 4 is exclusive)
        Arrays.parallelSort(intArray, 0, 4);

        System.out.println("\n---After Parallel Sort---");
        for (int i : intArray) {
            System.out.print(i + " ");
        }
    }

Result:

    Array Length 8
    ---Before Parallel Sort---
    18 1 14 2 15 12 5 4
    ---After Parallel Sort---
    1 2 14 18 15 12 5 4

Date Time API

Issues with Old Date API

- Thread Safety – The Date and Calendar classes are not thread safe, leaving developers to deal with hard-to-debug concurrency issues and to write additional code to handle thread safety. In contrast, the new Date and Time APIs introduced in Java 8 are immutable and thread safe, which takes that concurrency headache away from developers.

- API Design and Ease of Understanding – The Date and Calendar APIs are poorly designed, with inadequate methods for day-to-day operations. The new Date/Time APIs are ISO-centric and follow consistent domain models for date, time, duration, and periods. There is a wide variety of utility methods that support the most common operations.

- Zoned Date and Time – Developers had to write additional logic to handle time zones with the old APIs, whereas with the new APIs, time zones can be handled with the Local and Zoned date/time classes.
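The thread-safety point comes down to mutability, which a short comparison can illustrate. This is a minimal sketch with a hypothetical class name; it only contrasts `java.util.Date`, whose state can be changed through any reference, with `LocalDate`, whose methods return new objects.

```java
import java.time.LocalDate;
import java.util.Date;

public class ImmutabilityDemo {

    public static void main(String[] args) {
        // java.util.Date is mutable: a change through one reference is seen by every holder.
        Date legacy = new Date(0L);
        Date shared = legacy;
        shared.setTime(86_400_000L); // one day in milliseconds
        System.out.println(legacy.getTime()); // 86400000

        // LocalDate is immutable: plusDays returns a new object and leaves the original untouched.
        LocalDate original = LocalDate.of(2019, 6, 20);
        LocalDate later = original.plusDays(2);
        System.out.println(original); // 2019-06-20
        System.out.println(later);    // 2019-06-22
    }
}
```

Because a `LocalDate` never changes after creation, it can be shared between threads without synchronization.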

Date and Time official documentation.

LocalDate is an immutable date-time object that represents a date, often viewed as year-month-day. Other date fields, such as day-of-year, day-of-week and week-of-year, can also be accessed. For example, the value “2nd October 2007” can be stored in a LocalDate. This class does not store or represent a time or time-zone.

How to create a LocalDate

    // use now() method
    LocalDate now = LocalDate.now(ZoneId.systemDefault()); // e.g. 2019-09-10

    // use of() method
    LocalDate date1 = LocalDate.of(2019, 6, 20); // 2019-06-20

    // use parse() method
    LocalDate date2 = LocalDate.parse("2016-08-16").plusDays(2); // 2016-08-18

How to manipulate LocalDate

    // add days
    LocalDate date2 = LocalDate.parse("2016-08-16").plusDays(2); // 2016-08-18

    // minus
    date2 = date2.minus(1, ChronoUnit.MONTHS); // 2016-07-18

    // plus
    date2 = date2.plus(3, ChronoUnit.MONTHS); // 2016-10-18
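The other date fields mentioned earlier, such as day-of-week, day-of-year, and week-of-year, can be read directly from a LocalDate. The sketch below uses the 2nd October 2007 example; the class and the `describe` helper are hypothetical, and it assumes the ISO week definition via `IsoFields.WEEK_OF_WEEK_BASED_YEAR`.

```java
import java.time.LocalDate;
import java.time.temporal.IsoFields;

public class LocalDateFields {

    // Hypothetical helper that summarizes a few derived fields of a date.
    static String describe(LocalDate date) {
        return date.getDayOfWeek()
                + ", day " + date.getDayOfYear()
                + ", ISO week " + date.get(IsoFields.WEEK_OF_WEEK_BASED_YEAR);
    }

    public static void main(String[] args) {
        LocalDate date = LocalDate.of(2007, 10, 2);
        System.out.println(describe(date));    // TUESDAY, day 275, ISO week 40
        System.out.println(date.isLeapYear()); // false
    }
}
```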

LocalDateTime is an immutable date-time object that represents a date-time, often viewed as year-month-day-hour-minute-second. Other date and time fields, such as day-of-year, day-of-week, and week-of-year, can also be accessed. Time is represented to nanosecond precision. For example, the value “2nd October 2007 at 13:45.30.123456789” can be stored in a LocalDateTime. This class does not store or represent a time-zone.

How to create a LocalDateTime

    // use now() method
    LocalDateTime now = LocalDateTime.now(ZoneId.systemDefault());

    // use of() method
    LocalDateTime date1 = LocalDateTime.of(2019, 6, 20, 0, 0).plusDays(2); // 2019-06-22T00:00

How to manipulate LocalDateTime

    // add days
    LocalDateTime date1 = LocalDateTime.of(2019, 6, 20, 0, 0).plusDays(2); // 2019-06-22T00:00

    // minus
    date1 = date1.minus(1, ChronoUnit.MONTHS); // 2019-05-22T00:00

    // plus
    date1 = date1.plus(3, ChronoUnit.MONTHS); // 2019-08-22T00:00
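Since a LocalDateTime carries no time zone, a zone has to be attached before it can be converted between zones. The following sketch shows one way to do that with `ZonedDateTime`; the class name, the `toUtc` helper, and the choice of `America/Los_Angeles` are assumptions for illustration.

```java
import java.time.LocalDateTime;
import java.time.ZoneId;
import java.time.ZonedDateTime;

public class ZoneConversion {

    // Interprets the local date-time in the given zone and converts that instant to UTC.
    static ZonedDateTime toUtc(LocalDateTime local, ZoneId zone) {
        return local.atZone(zone).withZoneSameInstant(ZoneId.of("UTC"));
    }

    public static void main(String[] args) {
        LocalDateTime noon = LocalDateTime.of(2019, 6, 20, 12, 0);
        ZonedDateTime utc = toUtc(noon, ZoneId.of("America/Los_Angeles"));
        // Los Angeles is 7 hours behind UTC in June, so noon there is 19:00 UTC.
        System.out.println(utc.toLocalDateTime()); // 2019-06-20T19:00
    }
}
```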

LocalTime is an immutable date-time object that represents a time, often viewed as hour-minute-second. Time is represented to nanosecond precision. For example, the value “13:45.30.123456789” can be stored in a LocalTime.

    LocalTime now = LocalTime.now(Clock.systemDefaultZone());
    System.out.println("now = " + now);

    LocalTime time1 = LocalTime.of(12, 6).plusHours(2);
    System.out.println("time1 = " + time1); // time1 = 14:06

    time1 = time1.minus(1, ChronoUnit.MINUTES);
    System.out.println("time1 = " + time1); // time1 = 14:05

    time1 = time1.plus(3, ChronoUnit.HOURS);
    System.out.println("time1 = " + time1); // time1 = 17:05

Optional

Optional is a container object that may or may not contain a value. You can check if a value is present by calling the isPresent() method. If isPresent() returns true you can use the get() method to return the value.

The NullPointerException is a real problem in programming, and we must handle null values with care.

How to create an Optional

    String name = "Folau";
    // name can't be null or else you will get a NullPointerException
    Optional<String> opt = Optional.of(name);

If we expect null values, we can use the ofNullable method, which does not throw a NullPointerException.

    String name = "Folau";
    Optional<String> opt = Optional.ofNullable(name);

    String missingName = null;
    // this won't throw a NullPointerException; the result is an empty Optional
    Optional<String> emptyOpt = Optional.ofNullable(missingName);
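The difference between the two factory methods can be checked with a small runnable sketch. The class and helper names here are hypothetical; the behavior shown is that `Optional.of` rejects null while `Optional.ofNullable` turns it into an empty Optional.

```java
import java.util.Optional;

public class OptionalCreation {

    // Returns true if Optional.of rejects a null argument.
    static boolean ofThrowsOnNull() {
        try {
            Optional.of((String) null);
            return false;
        } catch (NullPointerException e) {
            return true;
        }
    }

    // Returns true if Optional.ofNullable maps null to an empty Optional.
    static boolean ofNullableIsEmptyOnNull() {
        return !Optional.ofNullable((String) null).isPresent();
    }

    public static void main(String[] args) {
        System.out.println(ofThrowsOnNull());          // true
        System.out.println(ofNullableIsEmptyOnNull()); // true
        System.out.println(Optional.of("Folau").get()); // Folau
    }
}
```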

Check if Optional is empty or not

The Optional.isPresent() method is used to check whether an Optional has a value. It returns true if the Optional has a value; otherwise it returns false.

    String name = "Folau";
    Optional<String> opt = Optional.ofNullable(name);

    if (opt.isPresent()) {
        System.out.println("Value available.");
    } else {
        System.out.println("Value not available.");
    }

The Optional.ifPresent() method is used to execute a lambda function (on the value) if the Optional is not empty. If the Optional is empty, it does nothing.

    opt.ifPresent(n -> {
        System.out.println(n);
    });

Optional.orElse(T other) returns the value if present; otherwise it returns other, which must be of the same type.

Optional.orElseGet(Supplier<? extends T> supplier) returns the value if present; otherwise it invokes the supplier and returns the result of that call.

    // When using orElse(), the default object is created whether or not the wrapped value is present.
    // So in this case, we have just created one redundant object that is never used.
    String n = opt.orElse(getRealName());
    System.out.println(n);

    // orElseGet() calls getRealName() only if the Optional is empty.
    String na = opt.orElseGet(() -> getRealName());
    System.out.println(na);

    ....

    private String getRealName() {
        System.out.println("getRealName()");
        return "Lisa";
    }

    // Run program result
    getRealName()
    Folau
    Folau

Optional.filter(Predicate<? super T> predicate) – If a value is present and the value matches the given predicate, return an Optional describing the value; otherwise return an empty Optional.

    Optional<Integer> ageOpt = Optional.ofNullable(20);

    // filter keeps the value only if it matches the predicate (here: younger than 21)
    boolean underAgeForBeer = ageOpt.filter(age -> age < 21).isPresent();

    System.out.println("underAgeForBeer = " + underAgeForBeer); // underAgeForBeer = true

Optional.map(Function mapper) – If a value is present, apply the provided mapping function to it, and if the result is non-null, return an Optional describing the result. Otherwise return an empty Optional.

    // Requires java.io.File
    Optional<String> optFileName = Optional.ofNullable("file.pdf");
    Optional<File> optFile = optFileName.map(fileName -> new File(fileName));
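The methods above are often chained so that no explicit null check is needed. As a rough sketch, the hypothetical helper `extensionOf` below combines ofNullable, filter, map, and orElse to extract a file extension, falling back to "NONE" when the name is null or has no dot.

```java
import java.util.Optional;

public class OptionalChain {

    // Hypothetical helper: returns the upper-cased extension of a file name, or "NONE".
    static String extensionOf(String fileName) {
        return Optional.ofNullable(fileName)
                .filter(name -> name.contains("."))                     // drop names without an extension
                .map(name -> name.substring(name.lastIndexOf('.') + 1)) // keep the text after the last dot
                .map(String::toUpperCase)
                .orElse("NONE");                                        // fallback for null or no extension
    }

    public static void main(String[] args) {
        System.out.println(extensionOf("file.pdf")); // PDF
        System.out.println(extensionOf("README"));   // NONE
        System.out.println(extensionOf(null));       // NONE
    }
}
```

Here orElse is fine because the fallback is a constant; orElseGet is the better choice when producing the fallback is expensive.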