AWS – ElastiCache

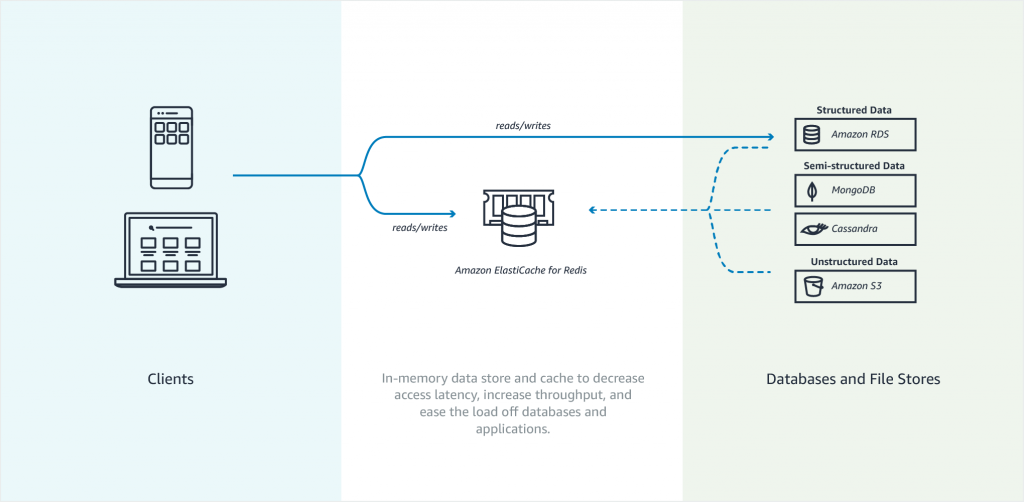

Amazon ElastiCache is a fully managed in-memory data store that can also serve as a cache for demanding applications requiring sub-millisecond response times. Because the service is managed, you no longer need to perform tasks such as hardware provisioning, software patching, setup, configuration, monitoring, failure recovery, and backups. ElastiCache continuously monitors your clusters to keep your workloads up and running so that you can focus on higher-value application development.

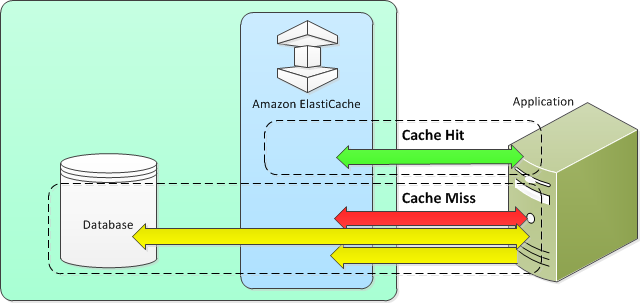

ElastiCache sits between your application and your database. When your application needs data, it first checks ElastiCache. If the data is there, ElastiCache returns it; if it is not, the application queries the database. Serving repeated reads from memory can significantly improve your application’s performance and reduce the load on the database.

Amazon ElastiCache for Redis

Features to enhance reliability

- Automatic detection and recovery from cache node failures.

- Multi-AZ with automatic failover of a failed primary cluster to a read replica in Redis clusters that support replication.

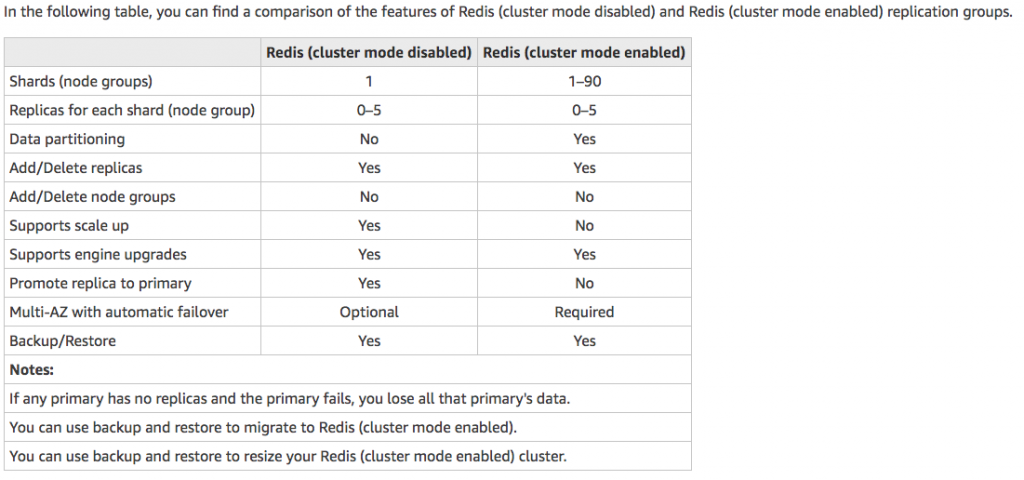

- Redis (cluster mode enabled) supports partitioning your data across up to 90 shards.

- Starting with Redis version 3.2.6, all subsequent supported versions support in-transit and at-rest encryption with authentication so you can build HIPAA-compliant applications.

- Flexible Availability Zone placement of nodes and clusters for increased fault tolerance.

- Integration with other AWS services such as Amazon EC2, Amazon CloudWatch, AWS CloudTrail, and Amazon SNS to provide a secure, high-performance, managed in-memory caching solution.
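Partitioning in Redis (cluster mode enabled) follows the Redis Cluster key-distribution scheme: each key maps to one of 16,384 hash slots via CRC16(key) mod 16384, and each shard owns a range of slots. The sketch below reimplements that mapping in plain Python for illustration only. `crc16_xmodem`, `key_slot`, and `shard_for` are hypothetical helpers, and the even, contiguous split of slots across shards is an assumption; a real cluster may distribute slots differently.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC-16/XMODEM (polynomial 0x1021, initial value 0), as used by Redis Cluster."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


NUM_SLOTS = 16384


def key_slot(key: str) -> int:
    """Return the hash slot (0..16383) for a key, honoring {hash tags}."""
    raw = key.encode("utf-8")
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        # Only a non-empty {...} section is hashed; "{}" is ignored.
        if end != -1 and end > start + 1:
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % NUM_SLOTS


def shard_for(key: str, num_shards: int) -> int:
    """Hypothetical: assumes slots are split evenly into contiguous ranges."""
    if not 1 <= num_shards <= 90:
        raise ValueError("this sketch assumes 1 to 90 shards")
    return key_slot(key) * num_shards // NUM_SLOTS


# Keys sharing a hash tag land in the same slot, so multi-key
# operations on them stay within a single shard.
for k in ("user:{42}:profile", "user:{42}:cart", "user:7:profile"):
    print(k, key_slot(k), shard_for(k, num_shards=3))
```

Hash tags are the practical takeaway: putting the shared part of related keys in braces keeps them on one shard. On a live cluster, the Redis `CLUSTER KEYSLOT` command reports the slot the server itself computes for a key.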

Use cases for ElastiCache

- Selling tickets to an event

- Selling products that are hot on the market

- Generating ads

- Serving web pages faster. Users tend to abandon a slower site in favor of a faster one. The study “Webpage Load Time Is Related to Visitor Loss” revealed that for every 100-ms (1/10 second) increase in load time, sales decrease 1 percent. Whether someone wants data for a webpage or for a report that drives business decisions, you can deliver that data much faster if it’s cached.

What should I cache?

- Speed and Expense – It’s always slower and more expensive to acquire data from a database than from a cache. Some database queries are inherently slower and more expensive than others. For example, queries that perform joins on multiple tables are significantly slower and more expensive than simple, single table queries. If the interesting data requires a slow and expensive query to acquire, it’s a candidate for caching. If acquiring the data requires a relatively quick and simple query, it might still be a candidate for caching, depending on other factors.

- Data and Access Pattern – Determining what to cache also involves understanding the data itself and its access patterns. For example, it doesn’t make sense to cache data that is rapidly changing or is seldom accessed. For caching to provide a meaningful benefit, the data should be relatively static and frequently accessed, such as a personal profile on a social media site. Conversely, you don’t want to cache data if caching it provides no speed or cost advantage. For example, it doesn’t make sense to cache webpages that return the results of a search because such queries and results are almost always unique.

- Staleness – By definition, cached data is stale data; even if in certain circumstances it isn’t stale, it should always be considered and treated as stale. In determining whether your data is a candidate for caching, you need to determine your application’s tolerance for stale data. Your application might be able to tolerate stale data in one context, but not another. For example, when serving a publicly traded stock price on a website, staleness might be acceptable, with a disclaimer that prices might be up to n minutes delayed. But when serving up the price for the same stock to a broker making a sale or purchase, you want real-time data.

Consider caching your data if the following are true:

- It is slow or expensive to acquire when compared to cache retrieval.

- It is accessed with sufficient frequency.

- It is relatively static or, if rapidly changing, staleness is not a significant issue.

ElastiCache for Redis components

- Nodes – A node is a fixed-size chunk of secure, network-attached RAM. Each node runs an instance of the engine and version that was chosen when you created your cluster. If necessary, you can scale the nodes in a cluster up or down to a different instance type. You can purchase nodes on demand, paying only for the node hours you use, or you can purchase reserved nodes at a significantly reduced hourly rate. If your usage rate is high, reserved nodes can save you money.

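- Shards – A shard (also called a node group) is a group of one to six related nodes. A Redis (cluster mode disabled) cluster always has exactly one shard, while a Redis (cluster mode enabled) cluster can have 1 to 90 shards. One of the nodes in a shard is the read/write primary node; the others are read-only replica nodes.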

- Clusters – A Redis cluster is a logical group of one or more shards. For improved fault tolerance, AWS recommends having at least two nodes in a Redis cluster and enabling Multi-AZ with automatic failover. If your application is read-intensive, AWS recommends adding read-only replicas to a Redis (cluster mode disabled) cluster so you can spread the reads across more nodes. ElastiCache supports dynamically changing a Redis (cluster mode disabled) cluster’s node type to a larger node type.

- Replica – Replication is implemented by grouping from two to six nodes in a shard. One of these nodes is the read/write primary node. All the other nodes are read-only replica nodes. Each replica node maintains a copy of the data from the primary node. Replica nodes use asynchronous replication mechanisms to keep synchronized with the primary node. Applications can read from any node in the cluster but can write only to primary nodes. Read replicas enhance scalability by spreading reads across multiple endpoints. Read replicas also improve fault tolerance by maintaining multiple copies of the data. Locating read replicas in multiple Availability Zones further improves fault tolerance.

- Endpoints – An endpoint is a unique address your application uses to connect to an ElastiCache node or cluster. A single-node Redis cluster has one endpoint for both reads and writes. A multi-node Redis (cluster mode disabled) cluster has a primary endpoint for writes and a reader endpoint for reads. A Redis (cluster mode enabled) cluster has a single configuration endpoint, through which a cluster-aware client performs both reads and writes.
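The primary/replica split and asynchronous replication described in the list above can be modeled in a few lines. This is a toy, in-process sketch, not an ElastiCache or Redis client: `Node` and `Shard` are hypothetical classes, replication lag is modeled as a queue that replicas apply only when `replicate()` runs, and round-robin reads stand in, loosely, for how a reader endpoint spreads connections across replicas.

```python
from collections import deque
from itertools import cycle


class Node:
    """A toy cache node holding an in-memory key/value dict."""

    def __init__(self, name: str):
        self.name = name
        self.data = {}


class Shard:
    """Hypothetical model of one shard: a primary plus 0 to 5 read replicas.

    Writes go only to the primary. Replication is asynchronous, modeled as a
    log of pending writes that replicas apply when replicate() is called.
    """

    def __init__(self, num_replicas: int):
        if not 0 <= num_replicas <= 5:
            raise ValueError("a shard holds one to six nodes in total")
        self.primary = Node("primary")
        self.replicas = [Node(f"replica-{i}") for i in range(num_replicas)]
        self._pending = deque()  # writes not yet applied on the replicas
        # Round-robin over replicas; with no replicas, reads use the primary.
        self._readers = cycle(self.replicas or [self.primary])

    def write(self, key, value):
        self.primary.data[key] = value
        self._pending.append((key, value))

    def read(self, key):
        return next(self._readers).data.get(key)

    def replicate(self):
        while self._pending:
            key, value = self._pending.popleft()
            for replica in self.replicas:
                replica.data[key] = value


shard = Shard(num_replicas=2)
shard.write("stock:AMZN", 100)
print(shard.read("stock:AMZN"))  # → None (replica has not caught up yet)
shard.replicate()
print(shard.read("stock:AMZN"))  # → 100
```

The first read returning `None` is the point of the example: because replication is asynchronous, a read served by a replica can briefly lag behind a write to the primary.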

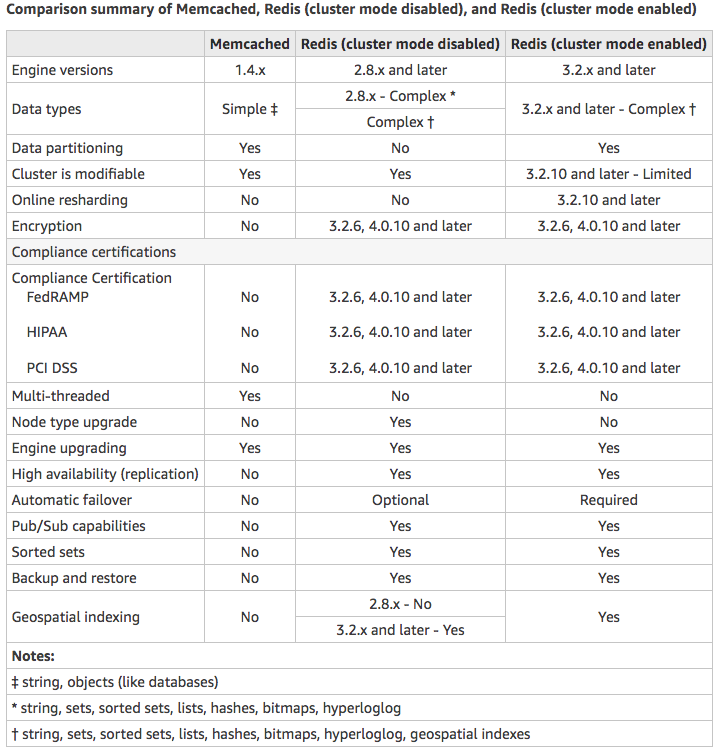

Choose Memcached if:

- You need the simplest model possible.

- You need to run large nodes with multiple cores or threads.

- You need the ability to scale out and in, adding and removing nodes as demand on your system increases and decreases.

- You need to cache objects, such as the results of database queries.

Choose Redis if:

- You need In-transit encryption.

- You need At-rest encryption.

- You need HIPAA compliance.

- You need to partition your data across 2 to 90 node groups (Redis cluster mode enabled).

- You don’t need to scale up to larger node types.

- You don’t need to change the number of replicas in a node group (partition).

- You need complex data types, such as strings, hashes, lists, sets, sorted sets, and bitmaps.

- You need to sort or rank in-memory datasets.

- You need persistence of your key store.

- You need to replicate your data from the primary to one or more read replicas for read-intensive applications.

- You need automatic failover if your primary node fails.

- You need publish and subscribe (pub/sub) capabilities—to inform clients about events on the server.

- You need backup and restore capabilities.

- You need to support multiple databases.

Caching Strategies

Lazy Loading – Lazy loading is a caching strategy that loads data into the cache only when necessary. ElastiCache is an in-memory key/value store that sits between your application and the data store (database) it accesses. Whenever your application requests data, it first makes the request to the ElastiCache cache. If the data exists in the cache and is current, ElastiCache returns the data to your application. If the data does not exist in the cache, or the data in the cache has expired, your application requests the data from your data store, which returns the data to your application. Your application then writes the data received from the store to the cache so it can be retrieved more quickly the next time it is requested.
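The lazy-loading read path above can be sketched with plain dictionaries. `FakeDatabase` and `LazyLoadingCache` are hypothetical stand-ins for the database and for ElastiCache; the `trips` counter makes the cost of a miss (three trips) versus a hit (one trip) visible, and the final lines show how data can go stale.

```python
class FakeDatabase:
    """Hypothetical stand-in for the backing data store; counts queries."""

    def __init__(self, rows):
        self.rows = dict(rows)
        self.queries = 0

    def query(self, key):
        self.queries += 1
        return self.rows.get(key)


class LazyLoadingCache:
    """Lazy loading: the cache is populated only after a miss."""

    def __init__(self, db):
        self.db = db
        self.cache = {}   # stands in for ElastiCache
        self.trips = 0    # round trips made to the cache or the database

    def get(self, key):
        self.trips += 1                  # trip 1: ask the cache
        if key in self.cache:
            return self.cache[key]       # hit: served from memory
        self.trips += 1                  # trip 2: miss, so query the database
        value = self.db.query(key)
        if value is not None:
            self.trips += 1              # trip 3: write the result to the cache
            self.cache[key] = value
        return value


db = FakeDatabase({"user:1": "Alice"})
app_cache = LazyLoadingCache(db)
app_cache.get("user:1")   # miss: 3 trips, 1 database query
app_cache.get("user:1")   # hit: 1 more trip, no database query
print(app_cache.trips, db.queries)  # → 4 1
```

If `db.rows["user:1"]` is later changed directly, `app_cache.get("user:1")` keeps returning `"Alice"`, which is exactly the stale-data drawback listed below.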

Advantages of Lazy Loading

- Only requested data is cached. Since most data is never requested, lazy loading avoids filling up the cache with data that isn’t requested.

- Node failures are not fatal. When a node fails and is replaced by a new, empty node the application continues to function, though with increased latency. As requests are made to the new node each cache miss results in a query of the database and adding the data copy to the cache so that subsequent requests are retrieved from the cache.

Disadvantages of Lazy Loading

- There is a cache miss penalty – Each cache miss results in three trips: the initial request for data from the cache, a query of the database for the data, and a write of the data to the cache. This can cause a noticeable delay in data getting to the application.

- Stale data – If data is only written to the cache when there is a cache miss, data in the cache can become stale since there are no updates to the cache when data is changed in the database.

Write Through – The write-through strategy adds data or updates data in the cache whenever data is written to the database.
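A minimal write-through sketch, again with dictionaries standing in for the database and the cache (`WriteThroughStore` is a hypothetical name). Every write updates both stores, so the cache never holds an outdated value; clearing the cache simulates a replacement node and shows the missing-data problem.

```python
class WriteThroughStore:
    """Write-through: every database write also updates the cache."""

    def __init__(self):
        self.db = {}     # hypothetical stand-in for the database
        self.cache = {}  # hypothetical stand-in for ElastiCache

    def write(self, key, value):
        self.db[key] = value     # trip 1: write to the database
        self.cache[key] = value  # trip 2: write the same value to the cache

    def read(self, key):
        # The cache never holds a stale value, but it may hold no value at all.
        return self.cache.get(key)


store = WriteThroughStore()
store.write("profile:42", {"name": "Ana"})
print(store.read("profile:42"))  # → {'name': 'Ana'}
store.cache.clear()              # simulate a new, empty replacement node
print(store.read("profile:42"))  # → None, although the database has the row
```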

Advantages of Write Through

- Data in the cache is never stale. Since the data in the cache is updated every time it is written to the database, the data in the cache is always current.

- Write penalty vs. read penalty. Every write involves two trips: a write to the cache and a write to the database. This adds latency to the process. That said, end users are generally more tolerant of latency when updating data than when retrieving data; there is an inherent sense that updates require more work and thus take longer.

Disadvantages of Write Through

- Missing data. In the case of spinning up a new node, whether due to a node failure or scaling out, there is missing data which continues to be missing until it is added or updated on the database. This can be minimized by implementing Lazy Loading in conjunction with Write Through.

- Cache churn. Because most data is never read, the cluster can hold a lot of data that no one ever requests, which wastes resources. Adding a TTL minimizes this wasted space.

Adding TTL Strategy – Lazy loading allows for stale data but doesn’t fail with empty nodes. Write-through ensures that data is always fresh, but may fail with empty nodes and may populate the cache with superfluous data. By adding a time to live (TTL) value to each write, we are able to enjoy the advantages of each strategy and largely avoid cluttering up the cache with superfluous data. Time to live (TTL) is an integer value that specifies the number of seconds (Redis can specify seconds or milliseconds) until the key expires. When an application attempts to read an expired key, it is treated as though the key is not found, meaning that the database is queried for the key and the cache is updated. This does not guarantee that a value is not stale, but it keeps data from getting too stale and requires that values in the cache are occasionally refreshed from the database.
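The combined strategy above (write-through, lazy loading on a miss, and a TTL on every entry) can be sketched as follows. In Redis the expiry is set per key, for example with the `EX` (seconds) or `PX` (milliseconds) option of `SET`, or with `EXPIRE`; here, as an assumption for illustration, a hypothetical `TTLCache` stores expiry timestamps itself and takes an injectable clock so expiry can be demonstrated without waiting.

```python
import time


class TTLCache:
    """Write-through plus lazy loading, with a TTL on every cached entry."""

    def __init__(self, db, ttl_seconds, clock=time.monotonic):
        self.db = db              # hypothetical dict standing in for the database
        self.ttl = ttl_seconds
        self.clock = clock        # injectable so expiry can be demonstrated
        self._entries = {}        # key -> (value, expires_at)

    def _put(self, key, value):
        self._entries[key] = (value, self.clock() + self.ttl)

    def write(self, key, value):
        self.db[key] = value      # write-through: database first...
        self._put(key, value)     # ...then the cache, with a fresh TTL

    def read(self, key):
        entry = self._entries.get(key)
        if entry is not None:
            value, expires_at = entry
            if self.clock() < expires_at:
                return value      # unexpired hit
            del self._entries[key]  # expired: treat it as not found
        value = self.db.get(key)  # lazy load on a miss or after expiry
        if value is not None:
            self._put(key, value)
        return value


now = [0.0]                                   # fake clock, in seconds
prices = {"AMZN": 100}
cache = TTLCache(prices, ttl_seconds=60, clock=lambda: now[0])
cache.read("AMZN")         # miss: loaded from the database, expires at t=60
prices["AMZN"] = 105       # changed in the database, bypassing the cache
now[0] = 30.0
print(cache.read("AMZN"))  # → 100 (stale, but within the TTL)
now[0] = 61.0
print(cache.read("AMZN"))  # → 105 (expired, so reloaded)
```

The TTL bounds how stale a value can get (here, at most 60 seconds) rather than preventing staleness, which matches the trade-off described above.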

Amazon ElastiCache for Memcached

Amazon ElastiCache for Memcached is a Memcached-compatible in-memory key-value store service that can be used as a cache or a data store. It delivers the performance, ease of use, and simplicity of Memcached. ElastiCache for Memcached is fully managed, scalable, and secure, making it an ideal candidate for use cases where frequently accessed data must be in memory. It is a popular choice for web, mobile apps, gaming, ad tech, and e-commerce.

Amazon ElastiCache for Memcached is a great choice for implementing an in-memory cache to decrease access latency, increase throughput, and ease the load on your relational or NoSQL database. Amazon ElastiCache can serve frequently requested items at sub-millisecond response times and lets you scale for higher loads without growing the costlier backend database layer. Database query result caching, persistent session caching, and full-page caching are all popular examples of caching with ElastiCache for Memcached.

Session stores are easy to create with Amazon ElastiCache for Memcached: use the Memcached hash table, which can be distributed across multiple nodes. Scaling the session store is as easy as adding a node and updating the clients to take advantage of the new node.
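Memcached servers do not coordinate with one another; the client decides which node holds each key. A common client-side technique for this is consistent hashing, sketched below as a hypothetical `HashRing` class. It is not an AWS or Memcached client API, and MD5 with 100 virtual points per node are arbitrary choices. The sketch shows why adding a node to a session store only remaps a fraction of the sessions.

```python
import bisect
import hashlib


class HashRing:
    """Consistent hashing: map keys to cache nodes on a ring of hash values.

    Each node is placed at `vnodes` points on the ring; a key belongs to the
    first node point at or after the key's own hash, wrapping around at the end.
    """

    def __init__(self, nodes, vnodes=100):
        self.vnodes = vnodes
        self._ring = []  # sorted list of (hash, node) points
        for node in nodes:
            self.add_node(node)

    @staticmethod
    def _hash(value: str) -> int:
        digest = hashlib.md5(value.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big")

    def add_node(self, node: str):
        for i in range(self.vnodes):
            bisect.insort(self._ring, (self._hash(f"{node}#{i}"), node))

    def node_for(self, key: str) -> str:
        if not self._ring:
            raise LookupError("the ring has no nodes")
        # (h,) sorts before any (h, node) point, so this finds the first
        # point whose hash is >= h.
        idx = bisect.bisect(self._ring, (self._hash(key),))
        return self._ring[idx % len(self._ring)][1]


ring = HashRing(["node-a", "node-b", "node-c"])
keys = [f"session:{i}" for i in range(10_000)]
before = {k: ring.node_for(k) for k in keys}
ring.add_node("node-d")
moved = sum(before[k] != ring.node_for(k) for k in keys)
print(f"{moved / len(keys):.0%} of sessions moved")
```

With four equally weighted nodes, the new node should own roughly a quarter of the ring, so only about that share of sessions moves, and every moved session moves to the new node. By contrast, naive `hash(key) % N` placement would remap about three quarters of the keys when going from three to four nodes.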

Features to enhance reliability:

- Automatic detection and recovery from cache node failures.

- Automatic discovery of nodes within a cluster enabled for automatic discovery so that no changes need to be made to your application when you add or remove nodes.

- Flexible Availability Zone placement of nodes and clusters.

- Integration with other AWS services such as Amazon EC2, Amazon CloudWatch, AWS CloudTrail, and Amazon SNS to provide a secure, high-performance, managed in-memory caching solution.