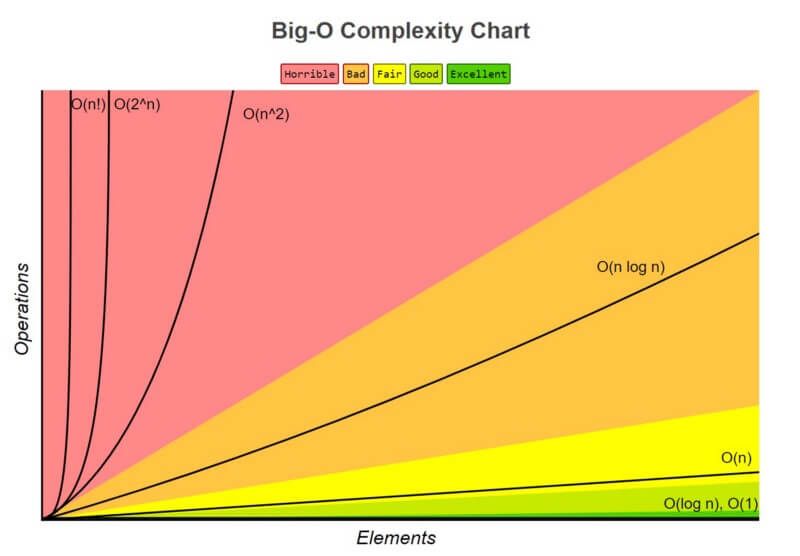

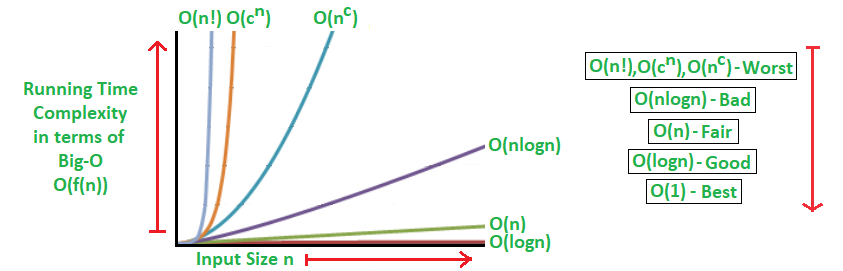

Big O Notation

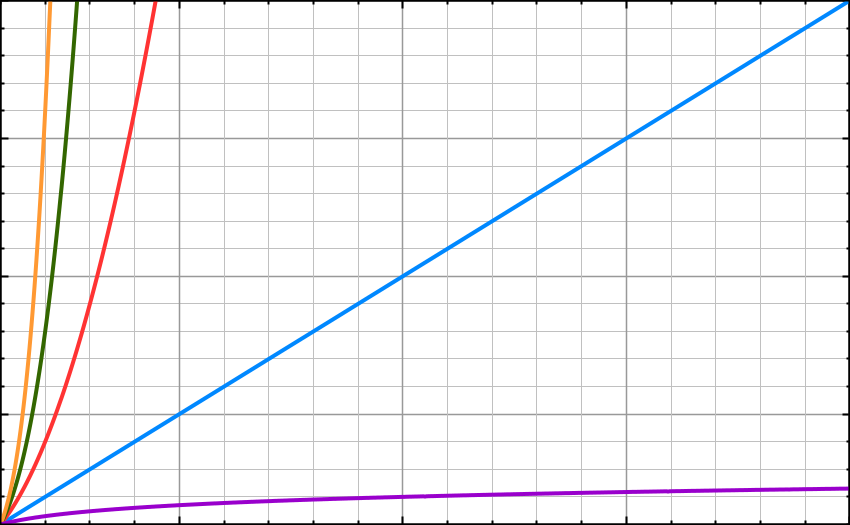

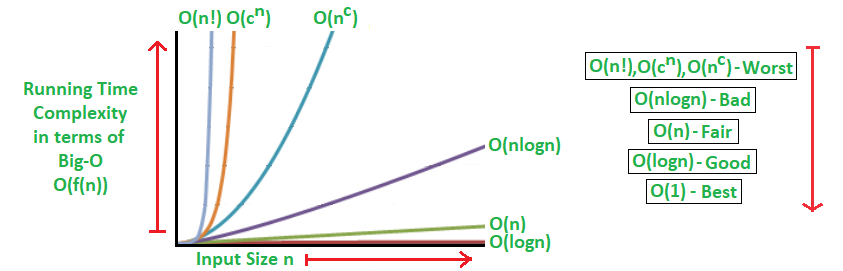

Big O is about how long an algorithm takes to run from start to end and how well it scales as the size of the input or dataset increases.

Big O is mostly measured based on the worst-case scenario even though some algorithms might finish earlier than others.

public int getFirstItem(int[] items) {

return items[0];

}

public void sendWelcomeNoteToUsers(User[] users) {

for (User user : users) {

emailService.sendNote(user);

...

}

}

public User getUserByEmail(User[] users, String email){

for (int i = 0; i < users.length; i++) {

if (users[i].equals(email)) {

return users[i];

}

}

}

public static void suspendAllUsersWithinPlans(Plan[] plans) {

for (Plan plan : plans) {

List<User> users = plan.getUsers();

for (User user : users) {

// suspend logic goes here.

}

}

}

private static void bubble_sort(int[] input, boolean ascending) {

int inputLength = input.length;

int temp;

boolean is_sorted;

for (int i = 0; i < inputLength; i++) {

is_sorted = true;

for (int j = 1; j < (inputLength - i); j++) {

if (ascending) {

if (input[j - 1] > input[j]) {

temp = input[j - 1];

input[j - 1] = input[j];

input[j] = temp;

is_sorted = false;

}

} else {

if (input[j - 1] < input[j]) {

temp = input[j - 1];

input[j - 1] = input[j];

input[j] = temp;

is_sorted = false;

}

}

}

// is sorted? then break it, avoid useless loop.

if (is_sorted) break;

}

}

public static int binarySearch(int[] elements, int target) {

int left = 0;

int right = elements.length - 1;

while (left <= right)

{

int middle = (left + right) / 2;

if (target < elements[middle])

{

right = middle - 1;

}

else if (target > elements[middle])

{

left = middle + 1;

}

else {

return middle;

}

}

return -1;

}

public static void main(String[] args){

int[] arr1 = {-20, 3, 15, 81, 432};

// test when the target is in the middle

int index = binarySearch(arr1,15);

System.out.println(index);

// test when the target is the first item in the array

index = binarySearch(arr1,-20);

System.out.println(index);

// test when the target is in the array - last

index = binarySearch(arr1,432);

System.out.println(index);

// test when the target is not in the array

index = binarySearch(arr1,53);

System.out.println(index);

}

for (int i = 1; i <= n; i++){

for(int j = 1; j < 8; j = j * 2) {

System.out.println("Hey - I'm busy looking at: " + i + " and " + j);

}

}

int k = 5;

int n = 6;

int power = (int)Math.pow(k, n);

System.out.println("power: " + power);

for (int i = 1; i <= power; i++){

System.out.println("Hey - I'm busy looking at: " + i);

}

for (int i = 1; i <= factorial(n); i++) {

System.out.println("Hey - I'm busy looking at: " + i);

}

....

public int factorial(int number) {

int fact = 1;

for (int i = 1; i <= number; i++) {

fact = fact * i;

}

return fact;

}

public static int getLargestItem(int[] items) {

int largest = Integer.MIN_VALUE;

for (int item : items) {

if (item > largest) {

largest = item;

}

}

return largest;

}

Introduction

Data Structures

A data structure is exactly what it sounds like — a structure that holds data. Unlike variables, which only hold a single point of data, data structures collect one or more points of data of the same type. Although a data structure can hold many points of data, a data structure, itself, is only a single object that can be stored in a variable like any other datatype. A data structure’s type becomes the type of the data points it holds. For that reason, a data structure is sometimes referred to as a container object, or a collection. Some data structures can expand infinitely in size, but others, like arrays have a fixed size.

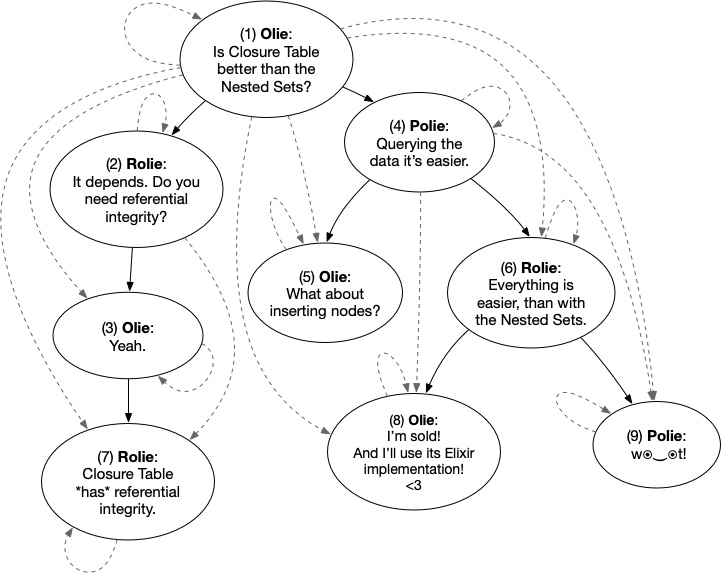

MySQL Closure Table

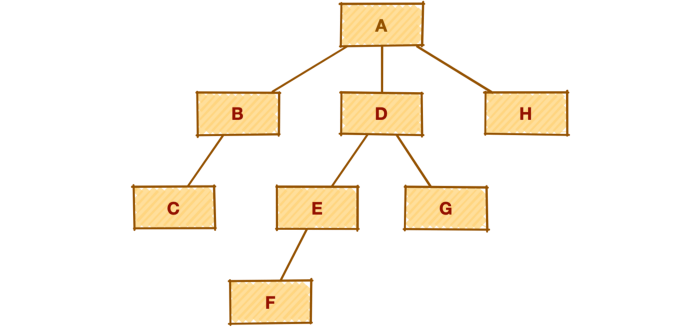

Sometimes we are given a task where we need to model a hierarchy of different complexity levels and not really sure how to do that properly in the most efficient, reliable, and flexible way. Let’s review one of the data modeling patterns that give us some answers for that.

Consider we have a hierarchy with a ragged variable depth, like on a picture below.

The requirements are that we need to:

- Query a subtree, preferably up to a certain level of depth, like direct subordinate as well as descendants up to a certain level

- Query all ancestors of the node

- Add/Remove a node to a hierarchy

- Change the layout of the hierarchy — move a subtree from one place to another (from one parent to another one)

- Manage efficiently the forest —a large number of trees, where each tree is not necessarily big in terms of number of nodes, and the maximum depth, but the number of trees could grow up to hundreds of thousands or even millions

- Manage nodes of the trees that enter short-term relationships. So there is a need to track the time period for which the actual relationships took place.

This is where a closure table comes into the picture.

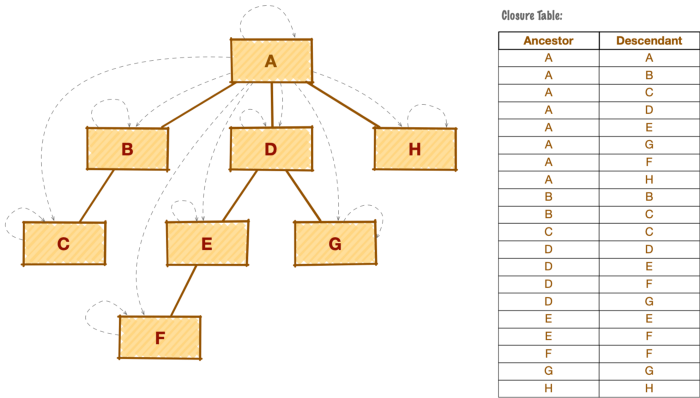

A closure table gives you the ability to work with tree structure hierarchies. It involves storing all paths through a tree, not just those with a direct parent-child relationship. For example: things like family tree(Parent – Kids – etc), company hierarchy(Owner – CEO – Manager – Employee), or file sytem(/users/folauk/Documents/test.mp4). There are other ways such as Path Enumeration and Nested Sets to keep track of this kind of information but using a closure is the simplest and most efficient way of doing it.

The main building block of the pattern is an additional structure(table) or a mapping table that keeps the tree relationships — a set of node pairs for each path from the ancestor to a descendant or parent to child, including a path to itself.

Our example here is a social media site where a user can put up a post. Other users can comment on that post and can comment on comments from the same post. The tree here is comments under comments. We have to keep track of which comments go under which comments and their parents.

CREATE TABLE `user` ( `id` bigint(20) NOT NULL AUTO_INCREMENT, `date_of_birth` date DEFAULT NULL, `email` varchar(255) DEFAULT NULL, `first_name` varchar(255) DEFAULT NULL, `gender` varchar(255) DEFAULT NULL, `last_name` varchar(255) DEFAULT NULL, `phone_number` varchar(255) DEFAULT NULL, PRIMARY KEY (`id`) ) ENGINE=InnoDB AUTO_INCREMENT=21 DEFAULT CHARSET=latin1; CREATE TABLE `post` ( `id` bigint(20) NOT NULL AUTO_INCREMENT, `content` longtext, `created_at` datetime(6) DEFAULT NULL, `last_updated_at` datetime(6) DEFAULT NULL, `uuid` varchar(255) NOT NULL, `author_id` bigint(20) DEFAULT NULL, PRIMARY KEY (`id`), UNIQUE KEY `UK_b1a5vs6t5vi32stck5kjw0tgf` (`uuid`), KEY `FK12njtf8e0jmyb45lqfpt6ad89` (`author_id`), CONSTRAINT `FK12njtf8e0jmyb45lqfpt6ad89` FOREIGN KEY (`author_id`) REFERENCES `user` (`id`) ) ENGINE=InnoDB AUTO_INCREMENT=41 DEFAULT CHARSET=latin1; CREATE TABLE `comment` ( `id` bigint(20) NOT NULL AUTO_INCREMENT, `content` longtext, `created_at` datetime(6) DEFAULT NULL, `last_updated_at` datetime(6) DEFAULT NULL, `author_id` bigint(20) DEFAULT NULL, `post_id` bigint(20) DEFAULT NULL, PRIMARY KEY (`id`), KEY `FKh1gtv412u19wcbx22177xbkjp` (`author_id`), KEY `FKs1slvnkuemjsq2kj4h3vhx7i1` (`post_id`), CONSTRAINT `FKh1gtv412u19wcbx22177xbkjp` FOREIGN KEY (`author_id`) REFERENCES `user` (`id`), CONSTRAINT `FKs1slvnkuemjsq2kj4h3vhx7i1` FOREIGN KEY (`post_id`) REFERENCES `post` (`id`) ) ENGINE=InnoDB AUTO_INCREMENT=201 DEFAULT CHARSET=latin1; CREATE TABLE `comment_tree` ( `id` bigint(20) NOT NULL AUTO_INCREMENT, `depth` int(11) DEFAULT NULL, `child_id` bigint(20) DEFAULT NULL, `parent_id` bigint(20) DEFAULT NULL, PRIMARY KEY (`id`), KEY `FKhosfyxi6cpykkhyrccfxq2k2x` (`child_id`), KEY `FKq2283664y5ywd961iojgjk98b` (`parent_id`), CONSTRAINT `FKhosfyxi6cpykkhyrccfxq2k2x` FOREIGN KEY (`child_id`) REFERENCES `comment` (`id`), CONSTRAINT `FKq2283664y5ywd961iojgjk98b` FOREIGN KEY (`parent_id`) REFERENCES `comment` (`id`) ) ENGINE=InnoDB AUTO_INCREMENT=1201 DEFAULT CHARSET=latin1;

Here we have a comment table that represents our comment, author of the comment, and the post it belongs to.

Insert comments(rows) into the comment_tree(closure) table

INSERT INTO comment_tree(parent_id, child_id, depth) SELECT p.parent_id, c.child_id, p.depth+c.depth+1 FROM comment_tree as p, comment_tree as c WHERE p.child_id = :parentId and c.parent_id = :childId;

Delete comments(rows) in the comment_tree(closure) table

DELETE link

FROM comment_tree as parent, comment_tree as link, comment_tree as child

WHERE parent.parent_id = link.parent_id AND child.child_id = link.child_id

-- AND parent.child_id = {parentId} and child.parent_id = {childId}

AND parent.child_id = ? AND child.parent_id = ?

Retrieve comments under a comment.

Get all comments under comment with id 4.

SELECT com.* FROM comment AS com JOIN comment_tree AS comTree ON com.id = comTree.child_id WHERE comTree.parent_id = 4;

Retrieve parent comments of a comment

Get all parent comments of comment with id 4.

-- retrieve parent comments of comment with id 4 SELECT com.*, comTree.* FROM comment AS com JOIN comment_tree AS comTree ON com.id = comTree.parent_id WHERE comTree.child_id = 4;

Java Best Practices

#1 Proper Naming Convention

Before writing any code you should specify a set of naming conventions for your Java project, such as how to name classes and interfaces, how to name methods, how to name variables, how to name constants, etc. Do not assign random names instead use meaningful and self-explanatory names so that it is readable and can be later understood by yourself, your teammates, quality assurance engineers and by staff who will be handling maintenance of the project.

- Self-explanatory: a name must reveal its intention so everyone can understand and change the code easily. For example, the names dor str do not reveal anything; however the names daysToExpire or inputText do reveal their intention clearly. Note that if a name requires a comment to describe itself, then the name is not self-explanatory.

- Meaningful distinctions: If names must be different, then they should also mean something different. For example, the names a1 and a2 are meaningless distinction; and the names source and destination are meaningful distinction.

- Pronounceable: names should be pronounceable as naturally as spoken language because we are humans – very good at words. For example, which name can you pronounce and remember easily: genStamp or generationTimestamp?

Here are some general naming rules:

- Class and interface names should be nouns, starting with an uppercase letter. For example: Student, Car, Rectangle, Painter, etc.

- Variable names should be nouns, starting with a lowercase letter. For example: number, counter, birthday, gender, etc.

- Method names should be verbs, starting with a lowercase letter. For example: run, start, stop, execute, etc.

- Constant names should have all UPPERCASE letters and words are separated by underscores. For example: MAX_SIZE, MIN_WIDTH, MIN_HEIGHT, etc.

- Using camelCase notation for names. For example: StudentManager, CarController, numberOfStudents, runAnalysis, etc.

#2 Avoid Creating Duplicate Objects

It is often appropriate to reuse a single object instead of creating a new functionally equivalent object each time it is needed. Reuse can be both faster and more stylish. An object can always be reused if it is immutable.

String s = new String("silly"); // DON'T DO THIS!

// improved version

String s = "No longer silly";

You can often avoid creating duplicate objects by using static factory methods in preference to constructors on immutable classes. For example, the static factory method Boolean.valueOf(String) is almost always preferable to the constructor Boolean(String). The constructor creates a new object each time it’s called while the static factory method is never required to do so.

#3 Overidding the equals and hashcode Methods

You must override hashCode in every class that overrides equals and viceversa. Failure to do so will result in a violation of the general contract for Object.hashCode, which will prevent your class from functioning properly in conjunction with all hash-based collections, including HashMap, HashSet, and Hashtable.

Equals and hashcode contract:

- Whenever hascode and equals ared invoked on the same object more than once during an execution of an application, the hashCode method must consistently return the same integer. This integer need not remain consistent from one execution of an application to another execution of the same application.

- If two objects are equal according to the equals(Object) method, then calling the hashCode method on each of the two objects must produce the same integer result.

- It is not required that if two objects are unequal according to the equals(Object) method, then calling the hashCode method on each of the two objects must produce distinct integer results. However, the programmer should be aware that producing distinct integer results for unequal objects may improve the performance of hash tables.

#4 Always Override toString

While java.lang.Object provides an implementation of the toString method, the string that it returns is generally not what the user of your class wants to see. It consists of the class name followed by an “at” sign (@) and the unsigned hexadecimal representation of the hash code. It is recommended that all subclasses override this method.

Providing a good toString implementation makes your class much more pleasant to use. The toString method is automatically invoked when your object is passed to println, the string concatenation operator (+), or, as of release 1.4, assert.

Whether or not you decide to specify the format, you should clearly document your intentions.

#5 Encapsulate internal data and logic implementation

A well-designed module hides all of its implementation details, cleanly separating its API from its implementation. Modules then communicate with one another only through their APIs and are oblivious to each others’ inner workings. This concept, known as information hiding or encapsulation, is one of the fundamental tenets of software design.

Information hiding is important for many reasons, most of which stem from the fact that it effectively decouples the modules that comprise a system, allowing them to be developed, tested, optimized, used, understood, and modified individually. This speeds up system development because modules can be developed in parallel. It eases the burden of maintenance because modules can be understood quickly and debugged with little fear of harming other modules.

The rule of thumb is that you should make each class or member as inaccessible as possible. In other words, you should use the lowest possible access level consistent with the proper functioning of the software that you are writing.

For members (fields, methods, nested classes, and nested interfaces) there are four possible access levels, listed here in order of increasing accessibility:

- private— The member is accessible only inside the top-level class where it is declared.

- package-private— The member is accessible from any class in the package where it is declared. Technically known as default access, this is the access level you get if no access modifier is specified.

- protected— The member is accessible from subclasses of the class where it is declared and from any class in the package where it is declared.

- public— The member is accessible from anywhere.

#6 Make Classes Immutable

An immutable class is simply a class whose instances cannot be modified. All of the information contained in each instance is provided when it is created and is fixed for the lifetime of the object. The Java platform libraries contain many immutable classes, including String, the primitive wrapper classes, and BigInteger and BigDecimal. There are many good reasons for this: Immutable classes are easier to design, implement, and use than mutable classes. They are less prone to error and are more secure.

To make a class immutable, follow these five rules:

- Don’t provide any methods that modify the object (known as mutators or setters).

- Ensure that no methods may be overridden. This prevents careless or malicious subclasses from compromising the immutable behavior of the class. Preventing method overrides is generally done by making the class final, but there are alternatives that we’ll discuss later.

- Make all fields final. This clearly expresses your intentions in a manner that is enforced by the system. Also, it may be necessary to ensure correct behavior if a reference to a newly created instance is passed from one thread to another without synchronization, depending on the results of ongoing efforts to rework the memory model [Pugh01a].

- Make all fields private. This prevents clients from modifying fields directly. While it is technically permissible for immutable classes to have public final fields containing primitive values or references to immutable objects, it is not recommended because it precludes changing the internal representation in a later release (Item 12).

- Ensure exclusive access to any mutable components. If your class has any fields that refer to mutable objects, ensure that clients of the class cannot obtain references to these objects. Never initialize such a field to a client-provided object reference nor return the object reference from an accessor. Make defensive copies (Item 24) in contructors, accessors, and readObject methods.

Immutable objects are simple. An immutable object can be in exactly one state, the state in which it was created. If you make sure that all constructors establish class invariants, then it is guaranteed that these invariants will remain true for all time, with no further effort on your part or on the part of the programmer who uses the class.

Immutable objects are inherently thread-safe; they require no synchronization. They cannot be corrupted by multiple threads accessing them concurrently. This is far and away the easiest approach to achieving thread safety.

The only real disadvantage of immutable classes is that they require a separate object for each distinct value.

#7 Use Composition in place of Inheritance

Classes should achieve code reuse by their composition rather than inheritance from a base or parent class. Inheritance should only be used when subclass ‘is a’ superclass. Don’t use inheritance to get code reuse. If there is no ‘is a’ relationship, then use composition for code reuse.

Instead of extending an existing class, give your new class a private field that references an instance of the existing class and now the existing class becomes a component of the new one.

Reasons to Favour Composition over Inheritance:

- The fact that Java does not support multiple inheritances is one reason for favoring composition over inheritance in Java. Since you can only extend one class in Java, but if you need multiple features, such as reading and writing character data into a file, you need Reader and Writer functionality. It makes your job simple to have them as private members, and this is called Composition.

- Composition offers better test-ability of a class than Inheritance. If one class consists of another class, you can easily construct a Mock Object representing a composed class for the sake of testing. This privilege is not given by inheritance.

- Although both Composition and Inheritance allow you to reuse code, one of Inheritance’s disadvantages is that it breaks encapsulation. If the subclass depends on the action of the superclass for its function, it suddenly becomes fragile. When super-class behavior changes, sub-class functionality can be broken without any modification on its part.

- In the timeless classic Design Patterns, several object-oriented design patterns listed by Gang of Four: Elements of Reusable Object-Oriented Software, favor Composition over Inheritance. Strategy design pattern, where composition and delegation are used to modify the behavior of Context without touching context code, is a classical example of this. Instead of getting it by inheritance, because Context uses composition to carry strategy, it is simple to have a new implementation of strategy at run-time.

- Another reason why composition is preferred over inheritance is flexibility. If you use Composition, you are flexible enough to replace the better and updated version of the Composed class implementation. One example is the use of the comparator class, which provides features for comparison.

#8 Prefer interfaces to abstract classes

The most obvious difference between the two mechanisms is that abstract classes are permitted to contain implementations for some methods while interfaces are not.

Existing classes can be easily retrofitted to implement a new interface. All you have to do is add the required methods if they don’t yet exist and add an implements clause to the class declaration.

Interfaces are ideal for defining mixins. A mixin is a type that a class can implement in addition to its “primary type” to declare that it provides some optional behavior.

Interfaces allow the construction of nonhierarchical type frameworks.

#9 Validate Method Parameters

Most methods and constructors have some restrictions on what values may be passed into their parameters. For example, it is not uncommon that index values must be nonnegative and object references must be non-null. You should clearly document all such restrictions and enforce them with checks at the beginning of the method body.

If an invalid parameter value is passed to a method and the method checks its parameters before execution, it will fail quickly and cleanly with an appropriate exception. If the method fails to check its parameters, several things could happen. The method could fail with a confusing exception in the midst of processing. Worse, the method could return normally but silently compute the wrong result. Worst of all, the method could return normally but leave some object in a compromised state, causing an error at some unrelated point in the code at some undetermined time in the future.

public Double mod(Double num1) {

if (num1 <= 0)

throw new ArithmeticException("Modulus not positive");

...

}

#10 Return empty object or list instead of null

#11 Use BigDecimal or Long(convert to coins) for monetary calculations

There are, however, two disadvantages to using BigDecimal: It’s less convenient than using a primitive arithmetic type, and its slower. The latter disadvantage is irrelevant if you’re solving a single short problem, but the former may annoy you.

An alternative to using BigDecimal is to use int or long, depending on the amounts involved, and to keep track of the decimal point yourself. In this example, the obvious approach is to do all computation in pennies instead of dollars.

int itemsBought = 0;

int funds = 100;

for (int price = 10; funds >= price; price += 10) {

itemsBought++;

funds -= price;

}

System.out.println(itemsBought + " items bought.");

System.out.println("Money left over: "+ funds + " cents");

Just don’t use float or double for any calculations that require an exact answer. Use BigDecimal if you want the system to keep track of the decimal point and you don’t mind the inconvenience of not using a primitive type. Using BigDecimal has the added advantage that it gives you full control over rounding, letting you select from eight rounding modes whenever an operation that entails rounding is performed. This comes in handy if you’re performing business calculations with legally mandated rounding behavior. If performance is of the essence, if you don’t mind keeping track of the decimal point yourself, and if the quantities aren’t too big, use int or long. If the quantities don’t exceed nine decimal digits, you can use int; if they don’t exceed eighteen digits, you can use long. If the quantities exceed eighteen digits, you must use BigDecimal.

#12 Use libraries if you can

You don’t have to concern yourself with the details of how a method does its job (although you can study the documentation or the source code if you’re morbidly curious). A senior engineer with a background in algorithms spent a good deal of time designing, implementing, and testing this method and then showed it to experts in the field to make sure it was right. Then the library was beta tested, released, and used extensively by thousands of programmers for several years. No flaws have yet been found in the method, but if a flaw were to be discovered, it would get fixed in the next release. By using a standard library, you take advantage of the knowledge of the experts who wrote it and the experience of those who used it before you.

A second advantage of using the libraries is that you don’t have to waste your time writing ad hoc solutions to problems only marginally related to your work. If you are like most programmers, you’d rather spend your time working on your application than on the underlying plumbing.

A third advantage of using standard libraries is that their performance tends to improve over time, with no effort on your part. Because many people use them and because they’re used in industry-standard benchmarks, the organizations that supply these libraries have a strong incentive to make them run faster. For example, the standard multiprecision arithmetic library, java.math, was rewritten in release 1.3, resulting in dramatic performance improvements.

Libraries also tend to gain new functionality over time. If a library class is missing some important functionality, the developer community will make this shortcoming known.

Numerous features are added to the libraries in every major release, and it pays to keep abreast of these additions.

Every programmer should be familiar with the contents of java.lang, java.util, and, to a lesser extent, java.io. Knowledge of other libraries can be acquired on an as-needed basis.

There are many additions to the libraries in the 1.4 release. Notable additions include the following:

- java.util.regex— A full-blown Perl-like regular expression facility.

- java.util.prefs— A facility for the persistent storage of user preferences andprogram configuration data.

- java.nio— A high-performance I/O facility, including scalable I/O (akin to the Unixpoll call) and memory-mapped I/O (akin to the Unix mmap call).

- java.util.LinkedHashSet, LinkedHashMap, IdentityHashMap— Newcollection implementations.

Remember don’t reinvent the wheel. If you need to do something that seems reasonably common, there may already be a class in the libraries that does what you want. If there is, use it; if you don’t know, check. Generally speaking, library code is likely to be better than code that you’d write yourself and is likely to improve over time. This is no reflection on your abilities as a programmer; economies of scale dictate that library code receives far more attention than the average developer could afford to devote to the same functionality.

Occasionally, a library facility may fail to meet your needs. The more specialized your needs, the more likely this is to happen. While your first impulse should be to use the libraries, if you’ve looked at what they have to offer in some area and it doesn’t meet your needs, use an alternate implementation. There will always be holes in the functionality provided by any finite set of libraries. If the functionality that you need is missing, you may have no choice but to implement it yourself.

#13 Keep method parameter list short

Avoid long parameter lists. As a rule, three parameters should be viewed as a practical maximum, and fewer is better. Most programmers can’t remember longer parameter lists. If many of your methods exceed this limit, your API won’t be usable without constant reference to its documentation. Long sequences of identically typed parameters are especially harmful. Not only won’t the users of your API be able to remember the order of the parameters, but when they transpose parameters by mistake, their programs will still compile and run. They just won’t do what their authors intended.

There are two techniques for shortening overly long parameter lists. One is to break the method up into multiple methods, each of which requires only a subset of the parameters. If done carelessly, this can lead to too many methods, but it can also help reduce the method count by increasing orthogonality.

A second technique for shortening overly long parameter lists is to create helper classes to hold aggregates of parameters. Typically these helper classes are static member classes. This technique is recommended if a frequently occurring sequence of parameters is seen to represent some distinct entity. For example suppose you are writing a class representing a card game, and you find yourself constantly passing a sequence of two parameters representing a card’s rank and its suit. Your API, as well as the internals of your class, would probably be improved if you added a helper class to represent a card and replaced every occurrence of the parameter sequence with a single parameter of the helper class.

#14 Don’t use + to concenate strings

Using the string concatenation operator repeatedly to concatenate n strings requires time quadratic in n. To achieve acceptable performance, use a StringBuffer or StringBuilder in place of a String.

The difference in performance is dramatic. If numItems returns 100 and lineForItem returns a constant 80-character string, the second method is ninety times faster on my machine than the first.

Here is Oracle coding convention for more learning.

Intellij Hot Keys

Reformat Code

For Mac

⌥ ⌘ L

command + option + l

View Structure/Outline of Class

command + 7